In the fast-moving field of smart agriculture, researchers have introduced GhostConv+CA-YOLOv8n, a lightweight deep learning model designed to tackle the persistent challenges of rice pest detection in real-world field environments. The model, detailed in a recent study published in *Frontiers in Plant Science*, addresses critical limitations in existing detectors, offering a more efficient and accurate solution for farmers and agritech companies alike.

The study, led by Fei Li, introduces a framework that improves the detection of rice pests while reducing computational cost. Traditional deep learning models often struggle with complex backgrounds and limited computing resources, which degrades their performance in the field. GhostConv+CA-YOLOv8n addresses both problems with several modifications. “Our model replaces standard convolutional operations with GhostConv modules, which are computationally efficient and maintain feature richness,” explains Li. This change removes 40,458 parameters, about 1.34% of the baseline model, making the network somewhat better suited to edge deployment.
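To give a feel for where the savings from a Ghost-style convolution come from, the sketch below compares weight counts for a single layer. It is an illustration under assumptions, not the study's configuration: it assumes the common Ghost layout in which half of the output channels come from a regular convolution and the other half from a cheap 5x5 depthwise convolution, and the layer sizes (64 input channels, 128 output channels, 3x3 kernel) are hypothetical.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a plain k x k convolution (bias and normalization ignored)."""
    return c_in * c_out * k * k


def ghost_conv_params(c_in: int, c_out: int, k: int, cheap_k: int = 5) -> int:
    """Weights in a Ghost-style convolution under the assumed layout.

    Half of the output channels come from a regular k x k convolution; the
    other half are generated from those by a depthwise cheap_k x cheap_k
    convolution (one filter per channel). This layout is an assumption.
    """
    if c_out % 2:
        raise ValueError("c_out must be even for this sketch")
    half = c_out // 2
    primary = c_in * half * k * k      # dense convolution to half the channels
    cheap = half * cheap_k * cheap_k   # depthwise: one filter per channel
    return primary + cheap


# Hypothetical layer sizes, chosen only for illustration.
std = standard_conv_params(64, 128, 3)
ghost = ghost_conv_params(64, 128, 3)
saved = std - ghost
print(f"standard: {std}, ghost: {ghost}, saved: {saved}")
```

How much a whole network saves depends on which layers are swapped and on their channel widths, so the per-layer figure here should not be read as the study's total.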

One of the standout features of GhostConv+CA-YOLOv8n is the Context Aggregation (CA) module. This module enhances low-level feature representation by fusing global and local context, which is particularly effective for detecting occluded pests in complex environments. “The CA module allows our model to better understand the context of the image, even when pests are partially hidden or obscured,” Li adds. This capability is crucial for real-world applications where pests are often difficult to detect due to varying scales and occlusions.

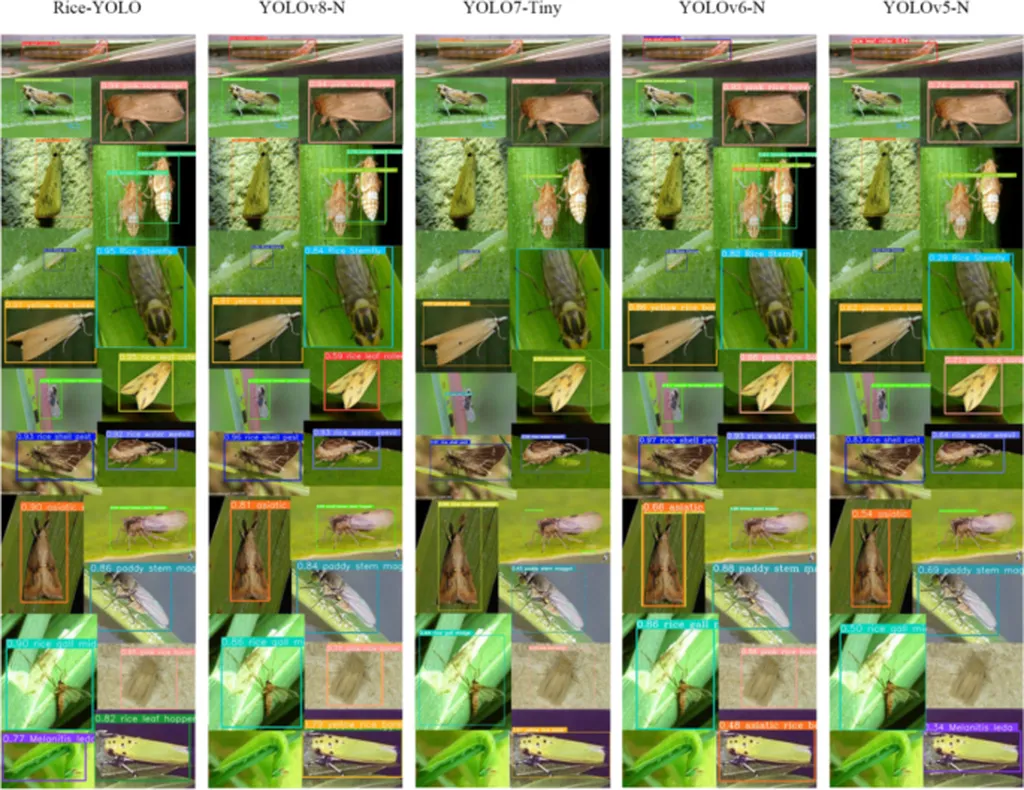

The model also employs Shape-IoU, which improves bounding box regression by accounting for target morphology, and Slide Loss, which addresses class imbalance by dynamically adjusting sample weighting during training. These enhancements contribute to the model’s performance. On the Ricepest15 dataset, GhostConv+CA-YOLOv8n achieves 89.959% precision and 82.258% recall, both improvements over the YOLOv8n baseline. Its mean Average Precision (mAP) reaches 94.527%, compared to 84.994% for the baseline, a gain of about 9.5 percentage points, while the parameter count falls by 1.34%.
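The article does not spell out how Slide Loss reweights samples. As a rough sketch, the code below uses the piecewise weighting from the original Slide Loss proposal (introduced with YOLO-FaceV2), where samples are split into easy and hard by comparing their IoU to a threshold `mu`, taken here as the mean IoU of the batch. Whether the study uses exactly this form, and the function names, are assumptions.

```python
import math
from typing import List


def slide_weight(iou: float, mu: float) -> float:
    """Piecewise sample weight as a function of IoU and threshold mu.

    Assumed form (from the original Slide Loss proposal):
      iou <= mu - 0.1       -> 1
      mu - 0.1 < iou < mu   -> exp(1 - mu)   (samples near the boundary)
      iou >= mu             -> exp(1 - iou)
    """
    if iou <= mu - 0.1:
        return 1.0
    if iou < mu:
        return math.exp(1.0 - mu)
    return math.exp(1.0 - iou)


def slide_weights(ious: List[float]) -> List[float]:
    """Weight a batch of samples, using the batch mean IoU as the threshold."""
    mu = sum(ious) / len(ious)
    return [slide_weight(iou, mu) for iou in ious]


# Hypothetical IoU values for three samples.
example_weights = slide_weights([0.2, 0.45, 0.9])
print(example_weights)
```

Samples just below the threshold receive the largest weight, which pushes training toward the hard, borderline cases that often belong to under-represented classes.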

The implications of this research extend beyond rice pest detection. The model’s efficiency and accuracy make it a valuable tool for smart agriculture systems, enabling real-time monitoring and early intervention. “This technology has the potential to transform how we approach pest management in agriculture,” Li notes. “By providing accurate and timely detection, we can help farmers reduce crop losses and improve yields, ultimately contributing to food security.”

The model also demonstrates strong generalization, achieving notable improvements in F1-score, precision, and recall on the IP102 benchmark. These results underscore its versatility and potential for broader applications in the agricultural sector.
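For readers unfamiliar with the F1-score, it is the harmonic mean of precision and recall. The article gives no IP102 figures, so the short sketch below simply applies the formula to the Ricepest15 precision and recall quoted above; the resulting value is derived for illustration and is not a number reported in the article.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Ricepest15 precision and recall as quoted in the article, in percent.
# The F1 value computed here is derived, not reported by the article.
ricepest15_f1 = f1_score(89.959, 82.258)
print(f"F1 = {ricepest15_f1:.2f}%")
```

Because the harmonic mean is pulled toward the lower of the two inputs, the result sits closer to the recall figure than a simple average would.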

As the world continues to grapple with the challenges of climate change and food security, innovations like GhostConv+CA-YOLOv8n offer a beacon of hope. By bridging the gap between accuracy and efficiency, this research paves the way for more sustainable and productive agricultural practices. The study’s findings, published in *Frontiers in Plant Science*, highlight the importance of leveraging advanced technologies to address real-world problems, ultimately shaping the future of smart agriculture.