Technology has become central to making agriculture more productive and sustainable. A recent study published in *Scientific Reports* introduces a deep learning approach to recognizing pests and diseases in crops that could advance precision agriculture. Led by Sana Parez of the Department of Software at Sejong University, the research presents a framework designed to make real-time agricultural monitoring more efficient and accessible.

Traditional pest recognition methods rely on manual feature extraction, which is time-consuming and not robust to changing field conditions. "The manual approach is labor-intensive and often inconsistent, leading to significant yield losses," explains Parez. To address these problems, the research team turned to deep learning models, which generally outperform conventional methods. These models have their own limitations, however: high computational demands and large model sizes make them difficult to deploy in real-world agricultural settings.

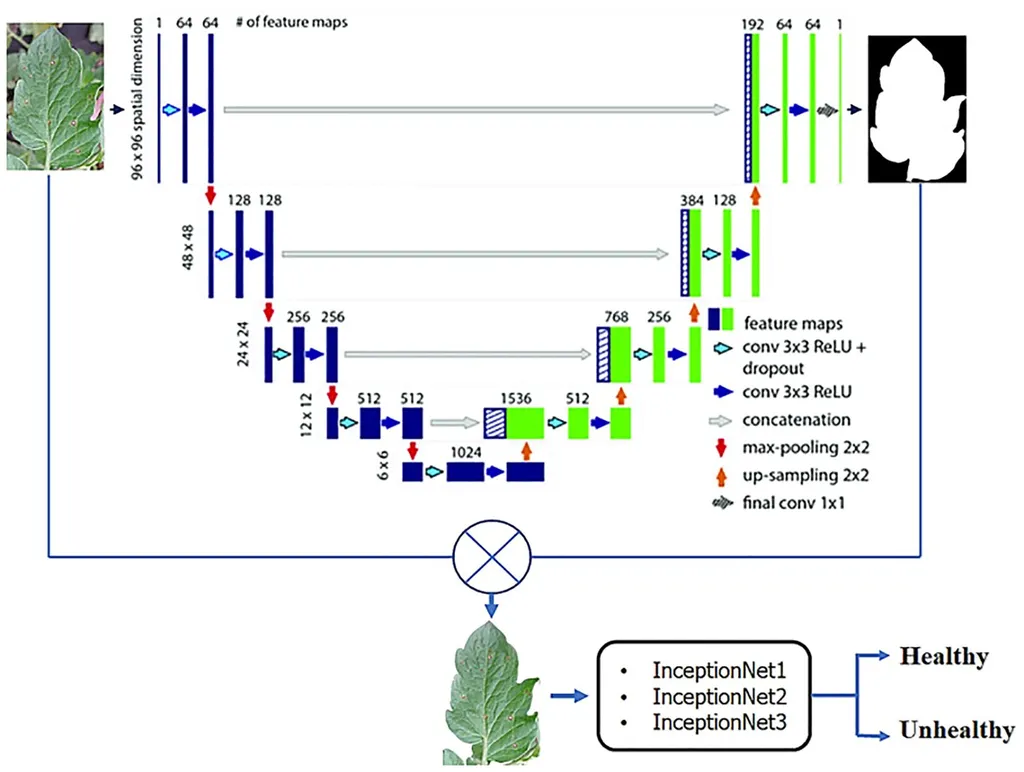

The proposed framework uses InceptionV3 as a backbone feature extractor to capture the discriminative features needed for accurate pest and disease identification. To refine these features, the team added a channel attention (CA) mechanism, which learns a weight for each feature channel so that informative channels are amplified and less useful ones are suppressed. "The CA mechanism helps in focusing on the most relevant features, enhancing the model's ability to distinguish between different types of pests and diseases," Parez elaborates.
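The article does not say which channel attention variant the team used. As an illustration only, the sketch below implements a squeeze-and-excitation style block, one common form of channel attention, in plain Python: each channel is averaged to a single value, passed through a small bottleneck network, and turned into a weight between 0 and 1 that rescales that channel. The function name, the reduction ratio `r`, and the toy sizes are assumptions; in a real model the input would be the backbone's output (an 8×8×2048 tensor for InceptionV3 at 299×299 input) and the weights would be learned.

```python
import math
import random
from typing import List, Tuple

# A feature map indexed as fmap[channel][row][col].
FeatureMap = List[List[List[float]]]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def channel_attention(fmap: FeatureMap, w1: Matrix, w2: Matrix) -> Tuple[FeatureMap, List[float]]:
    """Squeeze-and-excitation style channel attention (hypothetical stand-in).

    w1 has shape (C // r) x C and w2 has shape C x (C // r), where r is an
    assumed reduction ratio. Biases are omitted for brevity.
    """
    # Squeeze: global average pooling reduces each channel to a single value.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excitation: a two-layer bottleneck (ReLU, then sigmoid) yields one weight per channel.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    weights = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: every activation in channel c is multiplied by weights[c].
    scaled = [[[v * weights[c] for v in row] for row in ch] for c, ch in enumerate(fmap)]
    return scaled, weights


if __name__ == "__main__":
    random.seed(0)
    C, r, H, W = 8, 2, 4, 4  # toy sizes, far smaller than a real backbone output
    fmap = [[[random.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
    w1 = [[random.gauss(0.0, 1.0) for _ in range(C)] for _ in range(C // r)]
    w2 = [[random.gauss(0.0, 1.0) for _ in range(C // r)] for _ in range(C)]
    _, weights = channel_attention(fmap, w1, w2)
    print([round(w, 3) for w in weights])
```

The bottleneck (C to C // r to C) keeps the attention block cheap relative to the backbone, which matters when the overall goal is a deployable model.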

The study also integrates a metaheuristic optimization algorithm, which, according to the authors, substantially reduces the model's complexity and computational overhead without degrading its performance. The result is a lighter model that is easier to integrate into real-time agricultural monitoring systems.
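The article names neither the metaheuristic nor exactly what it optimizes. One plausible reading, offered here purely as an assumption, is a search over which feature channels to keep, trading an accuracy proxy against model size. The sketch below uses a plain genetic algorithm (a swapped-in example, not necessarily the authors' choice) over binary keep/prune masks; the per-channel "importance" scores and the fixed cost per kept channel are hypothetical stand-ins for a real validation-accuracy and model-size measurement.

```python
import random
from typing import Callable, List, Tuple

Mask = List[int]  # 1 = keep the channel, 0 = prune it


def make_fitness(importance: List[float], cost_per_channel: float) -> Callable[[Mask], float]:
    """Hypothetical fitness: summed importance of kept channels minus a size penalty."""
    def fitness(mask: Mask) -> float:
        accuracy_proxy = sum(s for s, keep in zip(importance, mask) if keep)
        return accuracy_proxy - cost_per_channel * sum(mask)
    return fitness


def genetic_mask_search(
    n_bits: int,
    fitness: Callable[[Mask], float],
    pop_size: int = 30,
    generations: int = 60,
    mutation_rate: float = 0.05,
    seed: int = 0,
) -> Tuple[Mask, float]:
    """Simple genetic algorithm over binary masks (requires n_bits >= 2)."""
    rng = random.Random(seed)
    # Seed the population with the unpruned model plus random masks.
    population = [[1] * n_bits] + [
        [rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size - 1)
    ]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        next_gen = ranked[:2]  # elitism: the two best masks survive unchanged
        while len(next_gen) < pop_size:
            # Tournament selection: best of three random candidates, twice.
            p1 = max(rng.sample(ranked, 3), key=fitness)
            p2 = max(rng.sample(ranked, 3), key=fitness)
            cut = rng.randrange(1, n_bits)  # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Bit-flip mutation.
            child = [b ^ 1 if rng.random() < mutation_rate else b for b in child]
            next_gen.append(child)
        population = next_gen
    best = max(population, key=fitness)
    return best, fitness(best)


if __name__ == "__main__":
    rng = random.Random(1)
    importance = [rng.random() for _ in range(16)]
    fit = make_fitness(importance, cost_per_channel=0.4)
    best_mask, best_fit = genetic_mask_search(16, fit)
    print(sum(best_mask), "of 16 channels kept; fitness", round(best_fit, 3))
```

Because the two best masks are always carried forward and the unpruned mask is in the initial population, the returned mask is never worse, under this fitness, than keeping every channel.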

The authors evaluated the model on the CropDP-181 dataset, where it outperformed several state-of-the-art methods in both classification accuracy and computational efficiency. It achieved a precision of 0.932, a recall of 0.891, an F1-score of 0.911, an overall accuracy of 88.50%, and a Matthews correlation coefficient (MCC) of 0.816. These results suggest the model is both effective and practical for use in the field.
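As a quick consistency check, the F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R), and the reported values agree: 2 × 0.932 × 0.891 / (0.932 + 0.891) ≈ 0.911. The sketch below computes that figure along with accuracy and MCC from binary confusion-matrix counts. The article does not say how the metrics were averaged across the dataset's classes, and a macro-averaged F1 is not in general equal to the harmonic mean of macro precision and recall, so the binary formulas here are illustrative; the function names are hypothetical.

```python
import math


def _safe_div(num: float, den: float) -> float:
    # Return 0.0 instead of raising when a denominator is zero.
    return num / den if den else 0.0


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return _safe_div(2 * precision * recall, precision + recall)


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Precision, recall, F1, accuracy, and MCC from a 2x2 confusion matrix."""
    precision = _safe_div(tp, tp + fp)
    recall = _safe_div(tp, tp + fn)
    accuracy = _safe_div(tp + tn, tp + fp + fn + tn)
    # MCC uses all four cells, so it stays informative when classes are imbalanced.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = _safe_div(tp * tn - fp * fn, denom)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1_from_pr(precision, recall),
        "accuracy": accuracy,
        "mcc": mcc,
    }


if __name__ == "__main__":
    print(round(f1_from_pr(0.932, 0.891), 3))  # 0.911
    print(binary_metrics(tp=40, fp=10, fn=5, tn=45))
```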

More accurate and efficient pest and disease recognition would let farmers act in a timely, targeted way to protect their crops, leading to higher yields and smaller losses. "This technology has the potential to transform precision agriculture, making it more accessible and effective for farmers worldwide," Parez states.

As agriculture continues to adopt new technology, deep learning models like the one proposed by Parez and colleagues could support smarter, more sustainable farming. The work also illustrates the role technology can play in addressing global food security.

More broadly, the study shows what interdisciplinary work combining software engineering and agricultural science can achieve. Continued development and deployment of such technologies will help shape the next generation of precision agriculture and build a more resilient, productive food system.