A study led by Yiqiang Sun of China Agricultural University in Beijing proposes a new approach to recognizing pests and their predators in farmland ecosystems. The research, published in the journal *Sensors*, introduces a tree-guided Transformer framework designed to improve the accuracy and efficiency of sensor-based image feature extraction and multitarget recognition.

Farmland ecosystems are inherently complex, with intricate pest–predator co-occurrence patterns that have long posed challenges for image-based recognition and ecological modeling. Traditional methods often fall short in capturing the nuances of these interactions, leading to less effective monitoring and management strategies. Enter Sun’s innovative framework, which leverages a hierarchical ecological taxonomy and a knowledge-augmented co-attention mechanism to extract meaningful features from sensor-acquired images.

“The key innovation here is the integration of ecological knowledge into the visual representation process,” explains Sun. “By embedding co-occurrence priors and using a hierarchical taxonomy, we can achieve a more accurate and interpretable understanding of the ecological dynamics at play.”
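To make the idea of a co-occurrence prior concrete, here is a minimal sketch of one way such a prior could be estimated from annotated images: count how often two species are labelled in the same image and normalize by how often the first species appears. This is an illustration only, not the method used in the study; the function name `cooccurrence_prior` and the species labels are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_prior(image_labels):
    """Estimate P(b present | a present) from per-image label lists.

    Hypothetical helper for illustration; not taken from the paper.
    """
    single = Counter()  # number of images containing each species
    pair = Counter()    # number of images containing both species of an ordered pair
    for labels in image_labels:
        unique = sorted(set(labels))  # ignore duplicate labels within one image
        single.update(unique)
        for a, b in combinations(unique, 2):
            pair[(a, b)] += 1
            pair[(b, a)] += 1
    # Conditional frequency: images with both a and b, divided by images with a.
    return {(a, b): n / single[a] for (a, b), n in pair.items()}


# Hypothetical annotations: each inner list holds the species labelled in one image.
annotations = [
    ["aphid", "ladybird"],
    ["aphid", "ladybird"],
    ["aphid"],
    ["armyworm", "ground_beetle"],
]

prior = cooccurrence_prior(annotations)
print(prior[("aphid", "ladybird")])  # 2 of the 3 aphid images also contain a ladybird
print(prior[("ladybird", "aphid")])  # both ladybird images also contain an aphid
```

In a sketch like this, the resulting table is asymmetric: a predator may almost always appear alongside its prey, while the prey often appears alone. A recognition model could use such a table to re-weight its predictions, although how the study embeds its priors is not described here.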

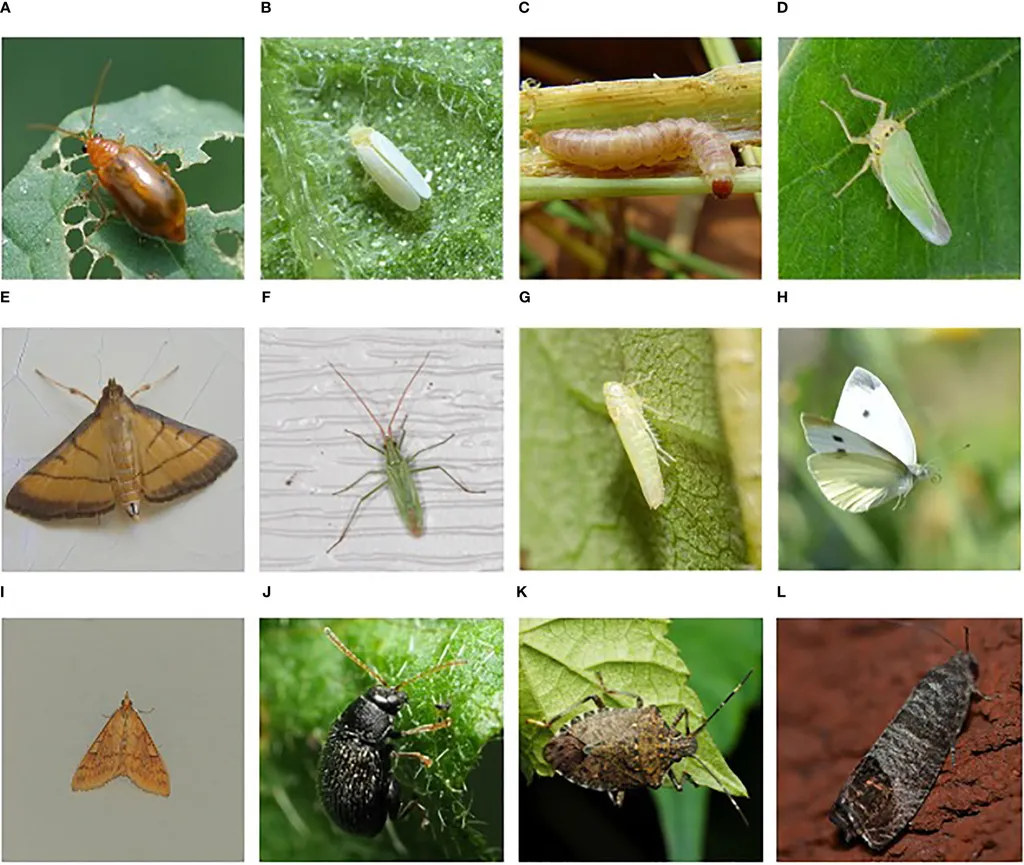

The study constructed a multimodal dataset comprising 60 pest and predator categories, complete with annotated images and semantic descriptions. The results are impressive: the framework achieved a precision of 90.4%, a recall of 86.7%, and an F1-score of 88.5% in image classification. In detection tasks, it attained a precision of 91.6% and a mean average precision (mAP@50) of 86.3%. For hierarchical reasoning and knowledge-enhanced tasks, the F1-scores reached 88.5% and 89.7%, respectively.
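The reported classification figures can be checked for internal consistency, since the F1-score is the harmonic mean of precision and recall. The short sketch below applies that standard definition to the numbers quoted above; the function name `f1_score` is chosen here for illustration and is not taken from the study's code.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both metrics are zero
    return 2 * precision * recall / (precision + recall)


# Classification precision and recall as reported in the article.
classification_f1 = f1_score(0.904, 0.867)
print(f"{classification_f1:.1%}")  # prints 88.5%
```

The result matches the 88.5% F1-score reported for image classification.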

These findings have significant implications for the agricultural sector. Accurate and efficient pest and predator recognition can lead to more targeted and effective pest control strategies, reducing the need for broad-spectrum pesticides and promoting more sustainable farming practices. This not only benefits the environment but also has economic advantages, as farmers can minimize crop losses and optimize resource use.

Moreover, the framework’s ability to extract structured, semantically aligned image features under real-world sensor conditions opens up new possibilities for intelligent agricultural monitoring. “This technology can be integrated into existing sensor systems, providing farmers with real-time, actionable insights,” says Sun. “It’s a game-changer for precision agriculture.”

The research also has commercial potential. Companies in agricultural technology, sensor manufacturing, and data analytics could build on the framework to develop more advanced monitoring solutions. The energy sector, too, could benefit from more efficient resource use and more sustainable practices, in line with global efforts to combat climate change and promote environmental stewardship.

Looking ahead, this research points toward more sophisticated and interpretable approaches to ecological modeling and monitoring. By integrating ecological knowledge with advanced computer vision techniques, researchers can better understand and manage agricultural systems. The work of Yiqiang Sun and the team shows how interdisciplinary research can drive innovation in the agricultural sector.