Researchers have developed a deep learning framework for detecting tomato diseases that reports 99.93% overall classification accuracy, a result that could give farmers and agritech developers a more reliable tool for managing plant disease.

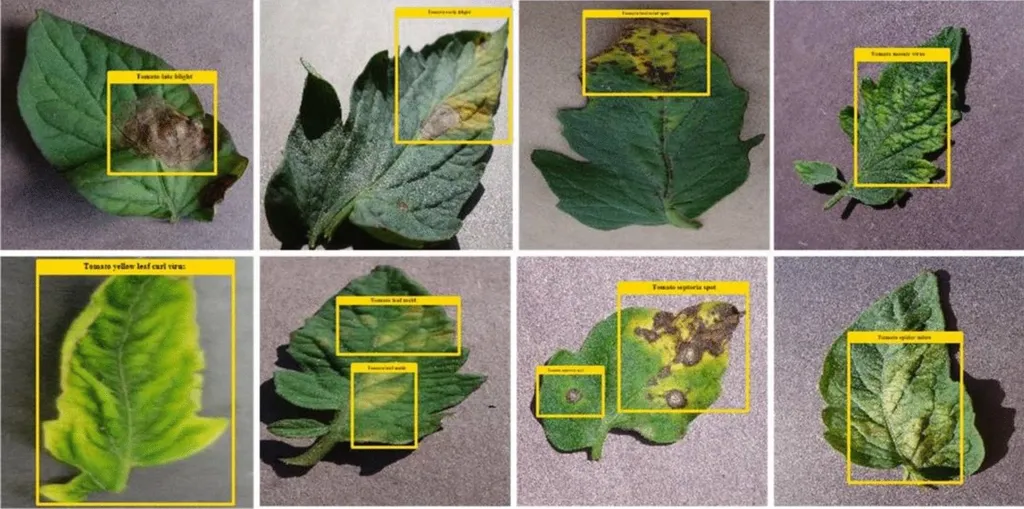

The framework, detailed in a recent study published in *PeerJ Computer Science*, builds on the YOLOv11 (You Only Look Once) architecture and adds an Attention-Guided Multi-Scale Feature Fusion (AGMS-FF) Enhancer. The model classifies tomato plants into 11 categories: 10 distinct diseases plus healthy specimens.

“Our enhanced YOLOv11 model achieved an outstanding overall accuracy of 99.93%,” said lead author Entesar Hamed I. Eliwa from the Department of Mathematics and Statistics at King Faisal University. “This level of accuracy is a game-changer for the agriculture sector, providing a robust and reliable tool for real-time disease detection.”

The AGMS-FF module refines feature representations by integrating multi-scale convolutional paths with both channel and spatial attention mechanisms, supported by residual connections to improve feature learning and model stability. The design aims to deliver high accuracy while keeping the model robust in real-world agricultural settings.
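To make the structure of such a module more concrete, here is a minimal toy sketch in plain Python. It is not the authors' implementation: the function names (`box_filter`, `agms_ff_toy`), the use of fixed moving averages in place of learned convolutions, the 1-D feature map, and the way the two attention gates are computed and combined are all assumptions chosen for illustration. It only shows the general pattern the article describes: several paths at different scales are fused, the result is reweighted per channel and per position, and the input is added back through a residual connection.

```python
import math


def sigmoid(v):
    """Squash a real value into (0, 1) so it can act as an attention gate."""
    return 1.0 / (1.0 + math.exp(-v))


def box_filter(row, k):
    """Same-length moving average with odd window k, zero-padded at the edges.

    Stands in for a learned convolution with kernel size k (assumption).
    """
    half = k // 2
    n = len(row)
    out = []
    for i in range(n):
        total = 0.0
        for j in range(i - half, i + half + 1):
            if 0 <= j < n:
                total += row[j]
        out.append(total / k)
    return out


def agms_ff_toy(x, kernel_sizes=(1, 3, 5)):
    """Toy attention-guided multi-scale fusion on a channels x positions map.

    x is a list of channels, each a list of floats of equal length.
    """
    n_ch, n_pos = len(x), len(x[0])

    # Multi-scale paths: filter each channel at every scale, then average.
    fused = []
    for row in x:
        paths = [box_filter(row, k) for k in kernel_sizes]
        fused.append([sum(p[i] for p in paths) / len(paths) for i in range(n_pos)])

    # Channel attention: one gate per channel, from its mean activation.
    ch_gate = [sigmoid(sum(row) / n_pos) for row in fused]

    # Spatial attention: one gate per position, from the mean across channels.
    sp_gate = [
        sigmoid(sum(fused[c][i] for c in range(n_ch)) / n_ch) for i in range(n_pos)
    ]

    # Residual connection: add the gated features back onto the input.
    return [
        [x[c][i] + ch_gate[c] * sp_gate[i] * fused[c][i] for i in range(n_pos)]
        for c in range(n_ch)
    ]


if __name__ == "__main__":
    features = [[1.0, 2.0, 3.0, 2.0], [0.5, 0.5, 0.5, 0.5]]
    for row in agms_ff_toy(features):
        print([round(v, 3) for v in row])
```

In a real network the moving averages would be learned convolutions and the gates would come from small learned layers, but the data flow (fuse, gate, add back) stays the same. The residual term means the module can only adjust the input features and never discard them outright, which is the usual stability argument for such connections.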

The framework was evaluated on the Zekeriya Tomato Disease Model dataset, which comprises 42,606 annotated images. Many disease classes reached 100.00% precision, recall, and F1-score. An ablation study confirmed the contribution of the AGMS-FF components, showing that the enhanced variants maintained high performance even where accuracy was already near saturation.
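For readers unfamiliar with these metrics, the sketch below shows how per-class precision, recall, and F1-score, along with overall accuracy, are conventionally derived from a confusion matrix. The three-class matrix is invented purely for illustration and does not come from the study; the function names are likewise hypothetical.

```python
def per_class_metrics(confusion):
    """Return (precision, recall, f1) per class.

    confusion[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(confusion)
    metrics = []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # predicted k, but wrong
        fn = sum(confusion[k]) - tp  # truly k, but missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if precision + recall:
            f1 = 2 * precision * recall / (precision + recall)
        else:
            f1 = 0.0
        metrics.append((precision, recall, f1))
    return metrics


def overall_accuracy(confusion):
    """Fraction of all samples that lie on the diagonal (correct predictions)."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(len(confusion)))
    return correct / total


if __name__ == "__main__":
    # Hypothetical 3-class confusion matrix, NOT data from the study.
    toy = [
        [50, 0, 0],
        [0, 48, 2],
        [0, 1, 49],
    ]
    for label, (p, r, f1) in enumerate(per_class_metrics(toy)):
        print(f"class {label}: precision={p:.4f} recall={r:.4f} f1={f1:.4f}")
    print(f"accuracy={overall_accuracy(toy):.4f}")
```

A class scores a perfect 100.00% on all three metrics only when it has no false positives and no false negatives, as class 0 does in this toy matrix.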

The model is also computationally efficient. The study reports a training duration of 126 minutes, an inference time of 31.4 milliseconds, memory usage of 3.2 GB, and a throughput of 38.2 FPS, figures that make it practical for real-time diagnosis.
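As a quick sanity check on how latency and throughput relate, the short sketch below converts between the two. The helper names are hypothetical, and the 30 FPS real-time budget is an assumed, commonly used target, not a figure from the study. Note that a 31.4 ms latency corresponds to roughly 31.8 frames per second if frames are processed strictly one at a time, so the reported 38.2 FPS was presumably measured under different conditions (for example with batching or pipelining); the article does not say.

```python
def fps_from_latency_ms(latency_ms):
    """Frames per second when frames are processed one at a time."""
    return 1000.0 / latency_ms


def latency_ms_from_fps(fps):
    """Average time per frame, in milliseconds, implied by a throughput."""
    return 1000.0 / fps


def meets_budget(latency_ms, target_fps=30.0):
    """True if the per-frame latency fits inside the target frame budget."""
    return latency_ms <= 1000.0 / target_fps


if __name__ == "__main__":
    reported_latency_ms = 31.4  # from the article
    reported_fps = 38.2  # from the article

    print(f"sequential FPS at 31.4 ms: {fps_from_latency_ms(reported_latency_ms):.1f}")
    print(f"per-frame time at 38.2 FPS: {latency_ms_from_fps(reported_fps):.1f} ms")
    print(f"fits assumed 30 FPS budget: {meets_budget(reported_latency_ms)}")
```

Either way, both figures sit within the roughly 33 ms per frame that a 30 FPS video stream allows.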

The implications for the agriculture sector are profound. “This research significantly bridges the gap between advanced deep-learning research and practical agricultural deployment,” Eliwa explained. “It offers real-time diagnostic capabilities essential for enhancing crop health, optimizing yields, and bolstering global food security.”

The research also has commercial potential. Farmers could use the technology to monitor their crops more effectively, reducing the risk of significant losses to disease. Agritech companies could integrate the model into their existing systems, giving farmers tools to manage crop health proactively. The framework’s efficiency and accuracy also make it useful for agricultural research and development on plant disease management.

The research sets a high bar for tomato disease classification and illustrates what deep learning can offer agriculture. The enhanced YOLOv11 model addresses the need for accurate and efficient disease detection, and continued work in this field is likely to produce further tools for protecting crops and food security.