In the face of increasingly erratic climate conditions, farmers are grappling with significant challenges in maintaining both the yield and quality of their crops. Sweet potato growers, in particular, have felt the strain, but a recent study published in *Agricultural Water Management* offers a glimmer of hope. Researchers, led by Soo Been Cho from the Department of Biosystems Engineering at Gyeongsang National University in South Korea, have developed a cost-effective monitoring system that uses standard RGB imagery and deep-learning models to detect water stress in sweet potatoes.

The study addresses a critical gap in the market: hyperspectral and multispectral imaging, currently the go-to tools for large-scale agricultural monitoring, are costly and operationally demanding. “While these methods are effective, they are often prohibitively expensive for many farmers,” Cho explains. “Our goal was to create a more accessible solution that could be easily integrated into existing farming practices.”

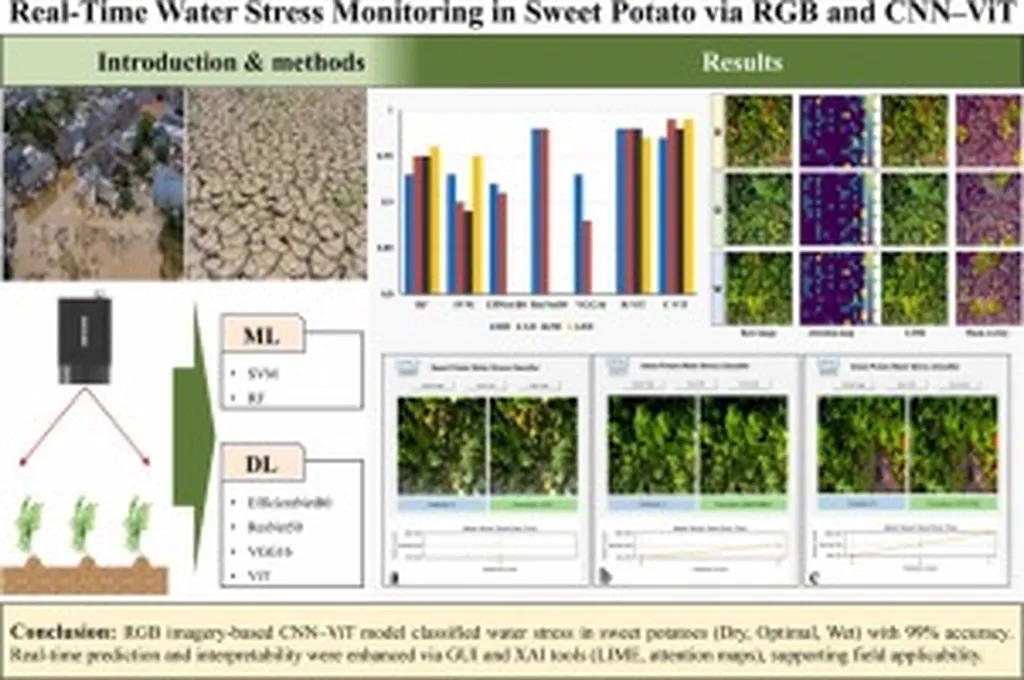

The team built a Convolutional Neural Network (CNN) as the base model and then created several hybrid models by combining it with a Random Forest (RF), a Support Vector Machine (SVM), and a Vision Transformer (ViT). The CNN-ViT hybrid emerged as the top performer, reaching an accuracy of 0.99. This model not only detects water stress but also provides interpretable results through feature visualization, making it a practical diagnostic tool for agricultural field monitoring.
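The article does not say how the hybrids are wired together. One common pattern, assumed here, is to use the convolutional stage as a feature extractor and hand its output to a separate classifier. The toy sketch below illustrates only that two-stage idea in plain Python: the fixed 3x3 kernels stand in for learned CNN filters, a nearest-centroid classifier stands in for RF or SVM, and the single-channel 8x8 "images" are synthetic. All names (`KERNELS`, `extract_features`, `NearestCentroid`, `make_image`) are hypothetical, and nothing here reproduces the study's models, data, or results.

```python
import random

# Hypothetical fixed 3x3 kernels standing in for learned CNN filters.
KERNELS = [
    [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]],    # horizontal gradient
    [[-1, -2, -1], [0, 0, 0], [1, 2, 1]],    # vertical gradient
    [[1 / 9] * 3 for _ in range(3)],         # local mean brightness
]


def conv_relu_pool(image, kernel):
    """Slide a 3x3 kernel over the image (no padding), apply ReLU,
    then global-average-pool the responses into a single float."""
    h, w = len(image), len(image[0])
    total = 0.0
    for i in range(h - 2):
        for j in range(w - 2):
            s = sum(kernel[a][b] * image[i + a][j + b]
                    for a in range(3) for b in range(3))
            total += max(s, 0.0)
    return total / ((h - 2) * (w - 2))


def extract_features(image):
    """Stage 1: one pooled feature per kernel."""
    return [conv_relu_pool(image, k) for k in KERNELS]


class NearestCentroid:
    """Stage 2: simple stand-in for the RF or SVM head."""

    def fit(self, X, y):
        self.centroids = {}
        for label in set(y):
            rows = [x for x, t in zip(X, y) if t == label]
            self.centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
        return self

    def predict(self, X):
        def dist(a, b):
            return sum((p - q) ** 2 for p, q in zip(a, b))
        return [min(self.centroids, key=lambda c: dist(x, self.centroids[c]))
                for x in X]


def make_image(label, rng):
    """Synthetic 8x8 patch: label 1 ("stressed") is darker than label 0."""
    base = 0.3 if label == 1 else 0.7
    return [[base + rng.uniform(-0.05, 0.05) for _ in range(8)]
            for _ in range(8)]


rng = random.Random(0)
labels = [i % 2 for i in range(60)]
images = [make_image(label, rng) for label in labels]
features = [extract_features(img) for img in images]

# Hold out the last 20 samples to measure accuracy = correct / total.
train_X, test_X = features[:40], features[40:]
train_y, test_y = labels[:40], labels[40:]
clf = NearestCentroid().fit(train_X, train_y)
pred = clf.predict(test_X)
accuracy = sum(p == t for p, t in zip(pred, test_y)) / len(test_y)
print(f"toy hold-out accuracy: {accuracy:.2f}")
```

In a real pipeline the first stage would be a trained CNN applied to RGB field images, and the second stage could be swapped for a random forest, an SVM, or a transformer block operating on the CNN's feature maps. The accuracy printed here reflects only how easy the synthetic data is to separate.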

The implications for the agriculture sector are substantial. By providing a cost-effective and accurate method for monitoring water stress, this technology can help farmers make more informed decisions about irrigation and resource management. “This system has the potential to revolutionize how we approach agricultural monitoring,” Cho notes. “It could lead to more efficient water use, higher yields, and ultimately, greater profitability for farmers.”

The study also highlights the potential for future developments in the field. As deep-learning models continue to evolve, the integration of these technologies with standard RGB imagery could become even more sophisticated. This could open up new avenues for monitoring a wide range of crop health indicators, not just water stress.

For now, the focus is on refining the technology and making it more accessible to farmers. The research team is optimistic about the future and the impact this technology can have on the agriculture sector. “We believe that this is just the beginning,” Cho says. “With further development and integration, we can create even more powerful tools to support farmers and ensure sustainable agricultural practices.”

As the agriculture sector continues to face the challenges posed by climate change, innovations like this offer practical support. By pairing deep learning with standard RGB imagery, farmers can better monitor their crops and make data-driven decisions that enhance productivity and sustainability. The study represents a significant step toward more efficient and affordable agricultural monitoring.