In the rapidly evolving world of unmanned aerial vehicles (UAVs), researchers have introduced the Contextual-Semantic Interactive Perception Network (CSIPN), a novel approach designed to improve the detection of small objects in UAV aerial images. The work holds significant promise for sectors including agriculture, logistics, public security, and disaster response.

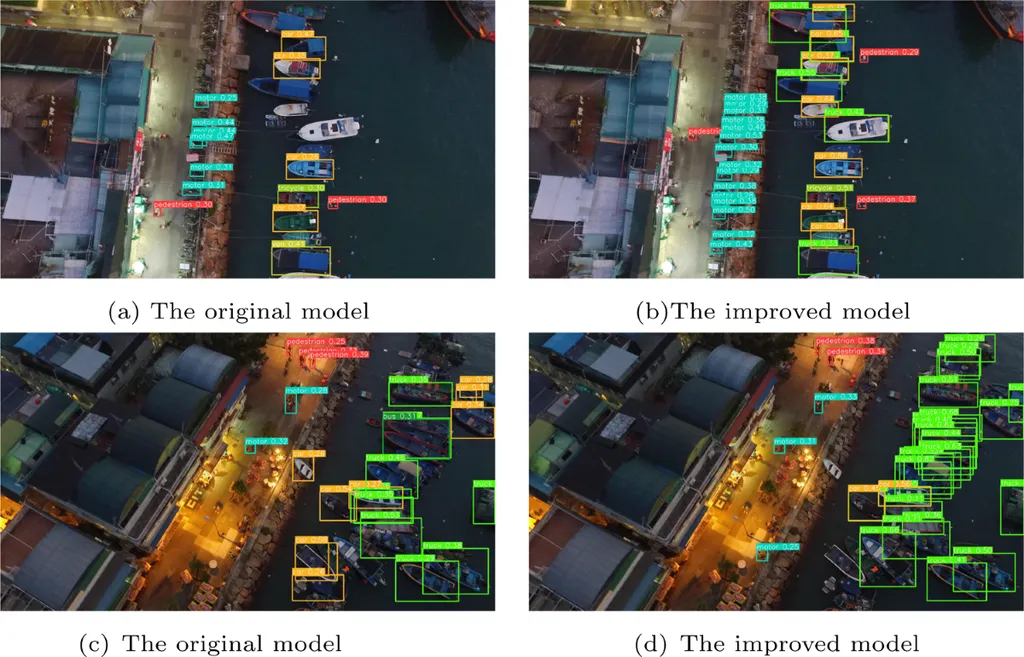

Detecting small objects in UAV aerial images has long been a hurdle for researchers and practitioners alike. Complex backgrounds often overwhelm small objects, making them hard to distinguish and prone to being missed by detectors. CSIPN tackles these issues by combining scene interaction modeling, dynamic context modeling, and dynamic feature fusion, an approach intended to keep small objects from being lost in background clutter.

The core components of the CSIPN include the Scene Interaction Modeling Module (SIMM), the Dynamic Context Modeling Module (DCMM), and the Semantic-Context Dynamic Fusion Module (SCDFM). The SIMM employs a lightweight self-attention mechanism to generate a global scene semantic embedding vector, which interacts with shallow spatial descriptors to highlight key spatial responses. “This interaction helps in selectively activating the most relevant spatial information, making small objects more discernible,” explains lead author Yiming Xu.
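To make the idea of a global scene embedding that re-weights spatial features more concrete, here is a minimal, hypothetical Python sketch. It is not the authors' SIMM. It assumes a single learned query vector (the parameter `query`) that attention-pools deep feature vectors into one global scene vector, and it assumes the "interaction" with shallow spatial descriptors is a scaled dot product passed through a sigmoid gate. Feature maps are flattened to plain lists of vectors so the example has no dependencies; all function names are illustrative.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def scene_embedding(tokens, query):
    """Attention-pool a list of feature vectors into one global scene vector.

    `query` stands in for a hypothetical learned query. Using a single query
    keeps the cost linear in the number of tokens.
    """
    d = len(query)
    scores = [dot(query, t) / math.sqrt(d) for t in tokens]
    weights = softmax(scores)
    return [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(d)]


def highlight_spatial(descriptors, scene_vec):
    """Scale each shallow spatial descriptor by a sigmoid gate of its
    affinity with the scene vector: aligned positions are kept, others damped."""
    d = len(scene_vec)
    out = []
    for desc in descriptors:
        gate = 1.0 / (1.0 + math.exp(-dot(desc, scene_vec) / math.sqrt(d)))
        out.append([gate * x for x in desc])
    return out


if __name__ == "__main__":
    deep_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy deep features
    shallow = [[1.0, 1.0], [-1.0, -1.0]]                # toy shallow descriptors
    scene = scene_embedding(deep_tokens, query=[1.0, 1.0])
    print(highlight_spatial(shallow, scene))
```

Pooling with one query is one plausible reading of "lightweight" self-attention; full token-to-token self-attention would instead cost time quadratic in the number of positions.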

The DCMM uses two receptive-field branches whose extent adjusts dynamically, adaptively modeling contextual cues so that each kind of small object is supplemented with the context it needs to be detected. Meanwhile, the SCDFM uses a dual-weighting strategy to dynamically fuse deep semantic information with shallow contextual details, emphasizing features relevant to small object detection while suppressing irrelevant background noise.
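The DCMM and SCDFM ideas can likewise be illustrated with a toy, one-dimensional Python sketch. Everything here is an assumption for illustration, not the published design. The two receptive-field branches are box averages of radius 1 and 3. The per-position branch weights are a two-way softmax over the branch responses, a swapped-in rule similar in spirit to selective-kernel attention. The "dual weighting" is modeled as one global weight per input plus a local gate driven by the deep map. The names `box_context`, `dynamic_context`, and `dual_weight_fuse` are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def box_context(x, radius):
    """Mean over a +/- radius window (edges clipped): a stand-in for one
    receptive-field branch."""
    n = len(x)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


def dynamic_context(x, small=1, large=3):
    """Blend a small and a large receptive field with per-position weights.

    A two-way softmax over the branch responses equals a sigmoid of their
    difference, so the two weights at each position always sum to 1.
    """
    a, b = box_context(x, small), box_context(x, large)
    out = []
    for p, q in zip(a, b):
        wa = sigmoid(p - q)
        out.append(wa * p + (1.0 - wa) * q)
    return out


def dual_weight_fuse(deep, shallow):
    """Fuse a deep semantic map with a shallow context map using two weights.

    Weight 1 (global): one scalar per input, from its mean activation.
    Weight 2 (local): a per-position gate from the deep map, which damps
    positions with weak semantic response (likely background).
    """
    g_deep = sigmoid(sum(deep) / len(deep))
    g_shallow = sigmoid(sum(shallow) / len(shallow))
    fused = []
    for d, s in zip(deep, shallow):
        gate = sigmoid(d)
        fused.append(gate * (g_deep * d + g_shallow * s))
    return fused


if __name__ == "__main__":
    shallow = [0.0, 0.0, 3.0, 0.0, 0.0, 0.0, 0.0]  # toy shallow map
    deep = [-2.0, -2.0, 4.0, -2.0, -2.0, -2.0, -2.0]  # toy deep map
    print(dual_weight_fuse(deep, dynamic_context(shallow)))
```

In this toy run the peak at index 2 survives fusion, while positions where the deep map responds weakly are pushed close to zero, loosely mirroring the background-suppression goal described above.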

On the reported benchmarks, CSIPN achieves mean Average Precision (mAP) scores of 37.2% on the TinyPerson dataset, 93.4% on the WAID dataset, 50.8% on the VisDrone-DET dataset, and 48.3% on the self-built WildDrone dataset, while using only 25.3M parameters. According to the authors, these results surpass those of existing state-of-the-art detectors, which they take as evidence of the network's superiority and robustness.

For the agriculture sector, the implications could be substantial. Accurate detection of small objects in aerial images can strengthen precision farming, helping farmers monitor crop health, identify pests, and manage resources more effectively. “This technology can transform how we approach agricultural monitoring, making it more efficient and precise,” says Yiming Xu.

The potential commercial impact of this research extends beyond agriculture. In logistics, UAVs equipped with CSIPN could improve inventory management and tracking. In public security, they could enhance surveillance and monitoring capabilities. Disaster response teams could also benefit from more accurate and timely detection of small objects in affected areas.

Looking ahead, this research could shape future developments in UAV technology and small object detection. The integration of advanced modeling techniques and dynamic feature fusion opens new avenues for innovation. As Yiming Xu notes, “The potential applications are vast, and we are excited to explore how this technology can be further refined and applied in various fields.”

Published in the journal ‘Remote Sensing’ and led by Yiming Xu from the Xi’an Key Laboratory of Intelligent Spectrum Sensing and Information Fusion at Xidian University, this research marks a significant step forward in the field of UAV-based object detection. As the technology continues to evolve, we can expect even more sophisticated and efficient solutions to emerge, driving progress across multiple industries.