In Indonesia, researchers have brewed up a novel approach to a longstanding challenge in coffee production: accurately detecting and classifying coffee cherries by maturity level. The task is difficult because cherries within a single bunch can look very similar, and the researchers tackle it with a hybrid framework that combines YOLOv8 with a Vision Transformer (ViT). The study, published in the *Journal of Applied Informatics and Computing*, offers a promising solution for image-based classification in agriculture.

The lead author, Ahmad Subki from Universitas Teknologi Mataram, explains, “Our goal was to develop a system that could automatically and accurately classify coffee cherries, even when they look very similar. By integrating YOLOv8 for initial detection and ViT for enhanced classification, we’ve significantly improved the accuracy of this process.”

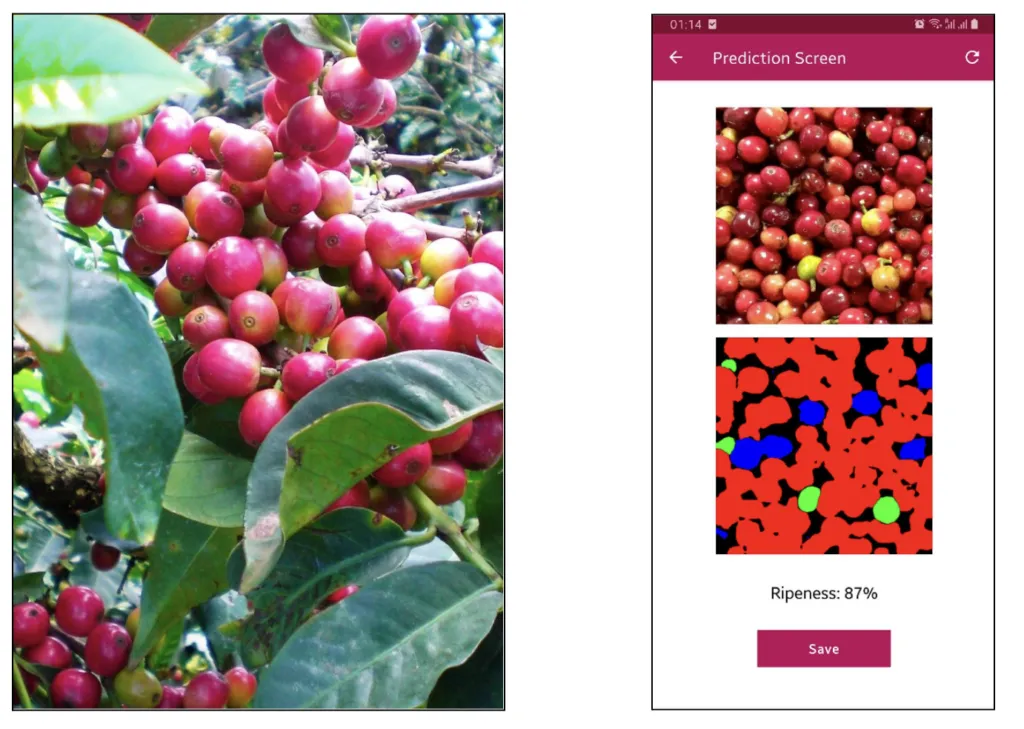

The hybrid framework works in two stages. First, YOLOv8 detects coffee cherries in images of whole bunches and crops each one out. The Vision Transformer then re-classifies these cropped images, refining the detector's maturity predictions. Training used a learning rate of 0.0001 and a batch size of 16, with runs of 50, 100, and 150 epochs. At 100 epochs, the YOLOv8+ViT model reached an accuracy of 89.52%, compared with 75.44% for the standalone YOLOv8 model, a gain of just over 14 percentage points.
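To make the detect-crop-reclassify flow concrete, here is a minimal Python sketch. It is not the authors' code: the real YOLOv8 detector and ViT classifier are replaced by hypothetical stand-ins (`toy_detector`, `toy_classifier`), images are toy grayscale grids, and the names `detect_then_reclassify`, `crop`, and `TRAIN_CONFIG` are illustrative assumptions. The idea it shows is that the first stage supplies the bounding box, while the second stage overwrites the class label based only on the cropped patch.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Tuple

Image = List[List[float]]        # toy grayscale image, pixel values in [0, 1]
Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), end coordinates exclusive

# Hyperparameters reported in the study; how they are fed to real
# YOLOv8/ViT trainers is outside the scope of this sketch.
TRAIN_CONFIG = {"learning_rate": 1e-4, "batch_size": 16, "epochs": (50, 100, 150)}


@dataclass(frozen=True)
class Detection:
    box: Box
    label: str    # maturity class
    score: float  # detector confidence


def crop(image: Image, box: Box) -> Image:
    """Cut the boxed region out of the image (the stage 1 -> stage 2 hand-off)."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]


def detect_then_reclassify(
    image: Image,
    detector: Callable[[Image], List[Detection]],
    classifier: Callable[[Image], str],
) -> List[Detection]:
    """Stage 1 localizes cherries; stage 2 re-labels each cropped cherry.

    The detector's box and score are kept, but its class label is replaced
    by the second-stage classifier's prediction on the crop.
    """
    results = []
    for det in detector(image):
        patch = crop(image, det.box)
        results.append(replace(det, label=classifier(patch)))
    return results


# --- Hypothetical stand-ins (NOT YOLOv8 or ViT) ---------------------------
def toy_detector(image: Image) -> List[Detection]:
    # Pretend the detector found two cherries but labeled both "unripe".
    return [Detection((0, 0, 2, 2), "unripe", 0.91),
            Detection((2, 0, 4, 2), "unripe", 0.87)]


def toy_classifier(patch: Image) -> str:
    # Label by mean brightness, a crude proxy for color-based maturity.
    pixels = [p for row in patch for p in row]
    return "ripe" if sum(pixels) / len(pixels) > 0.5 else "unripe"


if __name__ == "__main__":
    img = [[0.9, 0.8, 0.1, 0.2],
           [0.7, 0.9, 0.2, 0.1]]
    for det in detect_then_reclassify(img, toy_detector, toy_classifier):
        print(det.box, det.label, det.score)
```

Running it prints `(0, 0, 2, 2) ripe 0.91` and `(2, 0, 4, 2) unripe 0.87`: the second stage corrects the first cherry's label while leaving its location untouched. Passing the detector and classifier in as functions keeps the pipeline independent of any particular model library.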

The result has notable commercial implications for agriculture. Classifying coffee cherries accurately by maturity level could streamline harvesting, reduce waste, and improve the overall quality of coffee beans. As Subki notes, “This technology can be a game-changer for coffee producers, enabling them to optimize their operations and enhance the quality of their products.”

Beyond improving classification accuracy, the YOLOv8+ViT combination offers a flexible design that could be adapted to other domains involving complex, visually similar objects. It could also pave the way for future agricultural tools that make crops with high visual similarity easier to sort and process.

As the agricultural sector continues to embrace new technology, the work of Subki and his team shows the potential of computer vision and machine learning to transform traditional practices, and it underscores the value of interdisciplinary research in driving agricultural innovation.