Researchers at the College of Natural Resources and Environment at South China Agricultural University, in China’s Guangdong Province, have developed a crop classification model that analyzes the spatial and temporal features of satellite radar imagery together. The approach targets regions where persistent cloud and rain limit conventional satellite monitoring, and it could make smart-agriculture tools more dependable under unpredictable weather.

The challenge is a familiar one in cloudy and rainy regions: optical remote sensing often falls short because cloud cover blocks the view of the ground for much of the growing season. Synthetic aperture radar (SAR) offers an alternative. Its microwave signal passes through cloud and rain, and the backscatter it records is highly sensitive to crop phenology. However, existing classification algorithms have typically relied on either temporal or spatial features alone, leaving part of the information in SAR time series unused.

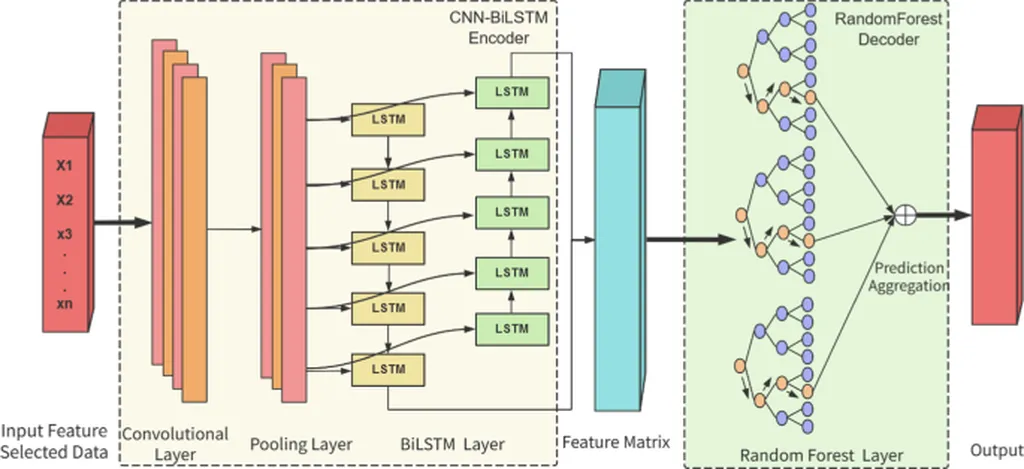

Enter Jie He and colleagues, who have proposed a CNN-BiLSTM model that pairs a convolutional neural network (CNN) for spatial feature extraction with a bidirectional long short-term memory (BiLSTM) network that captures long-term temporal dependencies. “Our approach achieves deep spatiotemporal coupling of time-series SAR data, constructing a more comprehensive feature representation for crop classification,” He explains. In practice, this means the classifier sees both how a crop looks within its neighborhood on each acquisition date and how its radar signature changes over the season.
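
To make the idea concrete, here is a minimal PyTorch sketch of a CNN followed by a BiLSTM. It is not the authors' architecture: the article does not give layer sizes, patch sizes, or class counts, so the class name `CnnBiLstm`, the two input channels (assumed here to be VV and VH backscatter), the 7×7 patch, the five classes, and all layer widths are illustrative assumptions. Only the sequence length of nine dates comes from the study.

```python
import torch
from torch import nn


class CnnBiLstm(nn.Module):
    """Hypothetical CNN + BiLSTM classifier for a time series of image patches."""

    def __init__(self, in_channels=2, n_classes=5, cnn_dim=16, lstm_dim=32):
        super().__init__()
        # Spatial branch: the same small CNN is applied to every date.
        self.cnn = nn.Sequential(
            nn.Conv2d(in_channels, cnn_dim, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # one feature vector per patch
        )
        # Temporal branch: reads the per-date features in both directions.
        self.lstm = nn.LSTM(cnn_dim, lstm_dim, batch_first=True,
                            bidirectional=True)
        self.head = nn.Linear(2 * lstm_dim, n_classes)

    def forward(self, x):
        # x: (batch, dates, channels, height, width)
        b, t, c, h, w = x.shape
        feats = self.cnn(x.reshape(b * t, c, h, w))  # (b*t, cnn_dim, 1, 1)
        feats = feats.reshape(b, t, -1)              # (b, t, cnn_dim)
        _, (h_n, _) = self.lstm(feats)               # h_n: (2, b, lstm_dim)
        # Concatenate the final forward and backward hidden states.
        summary = torch.cat([h_n[0], h_n[1]], dim=1)
        return self.head(summary)                    # (b, n_classes) logits


# Assumed input: 4 patches, 9 dates, 2 channels, 7x7 pixels each.
model = CnnBiLstm()
logits = model(torch.randn(4, 9, 2, 7, 7))
```

Applying the CNN to each date before the recurrent layer is one common way to couple the two kinds of features; the published model may combine them differently.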

The model was tested on nine Sentinel-1A images from the main growing season in Leizhou City. It outperformed the three baselines it was compared against: a support vector machine, a standalone CNN, and a standalone BiLSTM. The CNN-BiLSTM model achieved an overall accuracy of 95.8% and a Kappa coefficient of 0.94.
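
For readers unfamiliar with the two metrics, the sketch below shows how overall accuracy and Cohen's Kappa are computed from a confusion matrix. Kappa corrects the observed agreement for the agreement expected by chance. The function name and the 2×2 matrix are made up for illustration and are not data from the study.

```python
def overall_accuracy_and_kappa(confusion):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    confusion[i][j] = number of samples of true class i predicted as class j.
    """
    k = len(confusion)
    n = sum(sum(row) for row in confusion)

    # Observed agreement: share of samples on the diagonal.
    observed = sum(confusion[i][i] for i in range(k)) / n

    # Chance agreement: product of matching row and column totals.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)

    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Toy example (hypothetical counts): 90 of 100 samples classified correctly.
toy = [[45, 5],
       [5, 45]]
oa, kappa = overall_accuracy_and_kappa(toy)
print(round(oa, 3), round(kappa, 3))  # prints: 0.9 0.8
```

Because Kappa discounts chance agreement, it is usually lower than overall accuracy, which is consistent with the 95.8% and 0.94 pair reported above.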

The commercial implications for the agriculture sector are substantial. Accurate and efficient crop classification is fundamental to smart agriculture, enabling precision monitoring and management. This technology can help farmers optimize resource use, improve yield predictions, and enhance overall farm management. “This approach provides a novel solution for crop classification in cloudy and rainy regions,” He notes, highlighting its potential to transform agriculture in areas where weather conditions have historically posed challenges.

Looking ahead, this research could shape future developments in the field by encouraging the integration of advanced machine learning techniques with remote sensing data. The success of the CNN-BiLSTM model underscores the potential of deep learning in agriculture, paving the way for more sophisticated and accurate monitoring systems. As the agriculture sector continues to embrace technology, innovations like this will be crucial in driving efficiency, sustainability, and productivity.

The study was published in the IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing. With its reported accuracy and its relevance to cloudy and rainy regions, the research marks a step toward smarter, more resilient agriculture.