Researchers have introduced MRLCM-YOLO, a lightweight object detection model built to detect pests in cowpea crops under real field conditions. The work, published in *Smart Agricultural Technology*, could help the agriculture sector protect crop yield and quality.

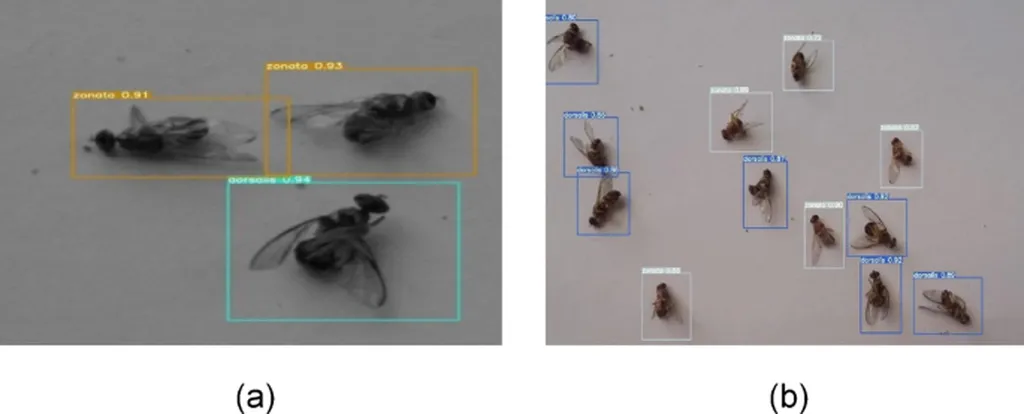

The study, led by Chunshan Wang from the School of Information Science and Technology at Hebei Agricultural University, addresses four obstacles to reliable pest detection: dense small objects, complex field environments, class imbalance, and limited computing resources. MRLCM-YOLO builds on the YOLOv11 framework and adds several components. Its backbone is RepViT, a lightweight network that borrows design ideas from vision transformers and relies on structural reparameterization to improve both feature expressiveness and inference speed. The model also introduces a feature fusion mechanism called CGRFPN and uses the LSKM module to focus attention on target regions. A decoupled detection head, MultiSEAMHead, separates the classification and localization tasks to make the model more robust.
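To give a feel for what "reparameterization" means in practice, here is a minimal sketch of the general idea, not the authors' code. It assumes a toy single-channel layer (a scale and bias followed by batch normalization) and hypothetical function names. The point it illustrates is that two operations used during training can be folded into one equivalent operation for inference, which is where the speed gain comes from.

```python
import math

def train_time_forward(x, w, b, gamma, beta, mean, var, eps=1e-5):
    """Two steps, as used during training: a linear op, then batch norm."""
    y = w * x + b
    return gamma * (y - mean) / math.sqrt(var + eps) + beta

def fuse(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold the batch-norm statistics into a single weight and bias."""
    scale = gamma / math.sqrt(var + eps)
    return w * scale, (b - mean) * scale + beta

def inference_forward(x, fused_w, fused_b):
    """One step at inference time, numerically equivalent to the two above."""
    return fused_w * x + fused_b

# Illustrative values only; they are not taken from the paper.
params = dict(w=1.5, b=0.2, gamma=0.9, beta=-0.1, mean=0.3, var=4.0)
fused_w, fused_b = fuse(**params)
for x in (-2.0, 0.0, 3.7):
    assert abs(train_time_forward(x, **params) - inference_forward(x, fused_w, fused_b)) < 1e-9
```

Real networks apply the same algebra per channel to convolution kernels, but the principle is unchanged: the fused layer produces the same output with fewer operations.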

The implications for the agriculture sector are substantial. Effective pest detection is crucial for maintaining crop health and ensuring high yields. Traditional methods of pest detection often fall short in complex and cluttered field environments, leading to potential losses in both yield and quality. MRLCM-YOLO’s ability to accurately identify pests in such challenging conditions offers a significant advantage. “This model represents a major leap forward in agricultural technology,” said Wang. “Its lightweight nature and high accuracy make it an ideal solution for real-world deployment, where resources are often limited.”

The model achieves 87.9% mAP50 and 57.2% mAP50–95, surpassing the baseline YOLOv11 model by 0.5 and 0.9 percentage points, respectively. With 9.1 million parameters, MRLCM-YOLO balances detection performance against computational cost. That efficiency matters for farmers and agricultural businesses, because a smaller model is cheaper to deploy and easier to scale.
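For readers unfamiliar with these metrics, both rest on intersection over union (IoU), the overlap between a predicted box and the annotated one. mAP50 counts a detection as correct when IoU is at least 0.50, while mAP50–95 averages results over ten stricter thresholds from 0.50 to 0.95. The sketch below illustrates only that matching step, assuming boxes in `(x1, y1, x2, y2)` form and hypothetical function names; a full mAP calculation also ranks detections by confidence and averages precision across classes.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# mAP50 uses only the first threshold; mAP50-95 averages over all ten.
THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

def thresholds_passed(pred, truth):
    """IoU thresholds at which this prediction counts as a correct match."""
    score = iou(pred, truth)
    return [t for t in THRESHOLDS if score >= t]

# Illustrative boxes only: a prediction shifted 2 pixels from the annotation.
truth = (10, 10, 50, 50)
pred = (12, 12, 52, 52)
print(round(iou(pred, truth), 3), thresholds_passed(pred, truth))
```

A slightly misplaced box like this one still passes the 0.50 threshold but fails the strictest ones, which is why mAP50–95 scores are always lower than mAP50 and why small pests, where a few pixels matter, are especially hard.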

The research also highlights the importance of high-resolution datasets in training and validating such models. The cowpea pest dataset used in this study comprises 3,855 annotated images spanning 19 pest categories, which served as the basis for developing and evaluating the model. It is a reminder that agricultural research depends on comprehensive, accurately labeled data.

Looking ahead, the development of MRLCM-YOLO could pave the way for further advancements in pest detection and agricultural technology. As the model is refined and adapted for other crops, it has the potential to become a standard tool in precision agriculture. The integration of advanced deep learning techniques with practical agricultural applications could lead to more sustainable and efficient farming practices, ultimately benefiting both farmers and consumers.

MRLCM-YOLO combines accurate detection in complex field environments with a lightweight, efficient design, which positions it as a practical tool for the agriculture sector. How far it goes will depend on how well it holds up as researchers extend it to new applications.