In the heart of Karnataka, Mysuru Taluk is undergoing significant transformations, and a groundbreaking study is shedding light on these changes with unprecedented accuracy. Researchers, led by H.N. Mahendra from the Department of Electronics and Communication Engineering at JSS Academy of Technical Education, have employed Vision Transformers (ViTs) to analyze land use and land cover (LULC) changes over two decades, from 2004 to 2024. This innovative approach, detailed in the journal *Applied Computing and Geosciences*, is not just about mapping changes; it’s about revolutionizing how we understand and manage our land resources.

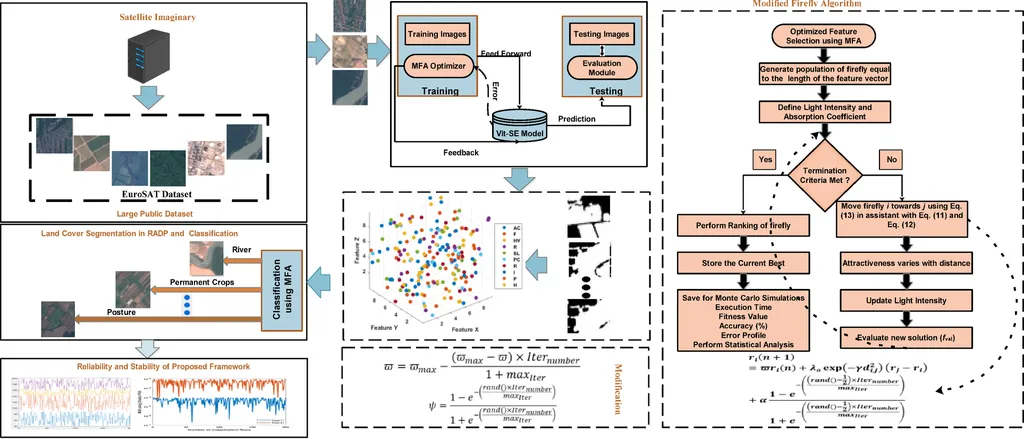

Traditional methods of LULC mapping have long struggled with the complexities of multi-temporal satellite data. Land features vary from scene to scene and change over time, which often leads to classification errors and makes subtle shifts hard to track. Enter Vision Transformers, a class of deep learning models that use self-attention mechanisms to capture long-range dependencies in data. “By employing ViTs, we aimed to overcome these limitations,” Mahendra explains. “Their ability to capture intricate patterns and relationships in the data offers a more refined classification process, ultimately enhancing our understanding of land use dynamics.”
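To make the self-attention idea concrete, here is a minimal sketch of scaled dot-product attention over a handful of image-patch tokens. It is an illustration only, not the model used in the study: the token count, the embedding size, the random weights, and the helper names (`matmul`, `self_attention`, `random_matrix`) are all assumptions, and a real ViT would add learned patch embeddings, positional encodings, multiple heads, and a tensor library.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a sequence of patch tokens."""
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(k[0]))
    k_t = [list(col) for col in zip(*k)]  # transpose of k
    scores = matmul(q, k_t)               # every patch scored against every other patch
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for learned weights or patch embeddings."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
num_patches, dim = 6, 4  # assumed sizes, chosen only to keep the example small
tokens = random_matrix(num_patches, dim, rng)
w_q, w_k, w_v = (random_matrix(dim, dim, rng) for _ in range(3))

attended, weights = self_attention(tokens, w_q, w_k, w_v)
print(len(attended), len(attended[0]))  # one output vector per patch
```

Each row of `weights` sums to 1 and spans every patch in the sequence, so a patch can draw on information from any other patch regardless of distance. This is what "long-range dependencies" refers to.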

The results speak for themselves. The study reports classification accuracies of 95.07% for 2004, 95.79% for 2014, and 96.74% for 2024. These figures not only highlight the effectiveness of ViTs but also underscore their potential to transform the field of remote sensing. Change detection analysis revealed that built-up areas in Mysuru Taluk increased by 17.25%, while agricultural land decreased by 16.24% over the two decades. These insights are invaluable for policymakers and urban planners, providing a data-driven foundation for strategies that balance urbanization with environmental sustainability.
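For readers curious how figures like these are typically derived, the sketch below computes an overall accuracy from predicted and reference labels, and a per-class change in area share between two classified maps. It is a toy illustration under stated assumptions: the class names and pixel labels are made up, the function names are hypothetical, and the change is expressed in percentage points of total area, which may or may not be the convention behind the study's reported percentages.

```python
from collections import Counter


def overall_accuracy(predicted, reference):
    """Percentage of samples whose predicted class matches the reference class."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    correct = sum(p == r for p, r in zip(predicted, reference))
    return 100.0 * correct / len(reference)


def class_shares(labels):
    """Share of the mapped area (in percent) occupied by each class."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: 100.0 * n / total for cls, n in counts.items()}


def share_change(earlier, later):
    """Change in each class's area share, in percentage points."""
    before, after = class_shares(earlier), class_shares(later)
    classes = sorted(set(before) | set(after))
    return {cls: after.get(cls, 0.0) - before.get(cls, 0.0) for cls in classes}


# Made-up ten-pixel maps, for illustration only (not data from the study).
map_2004 = ["agri"] * 6 + ["built"] * 2 + ["water"] * 2
map_2024 = ["agri"] * 4 + ["built"] * 4 + ["water"] * 2
change = share_change(map_2004, map_2024)

# Made-up validation sample: one of four pixels is misclassified.
reference = ["agri", "agri", "built", "water"]
predicted = ["agri", "built", "built", "water"]
accuracy = overall_accuracy(predicted, reference)

print(change)
print(accuracy)
```

In practice the labels would come from full classified rasters and an independent set of ground-truth samples, and accuracy would usually be reported alongside a confusion matrix rather than as a single number.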

For the agriculture sector, the implications are profound. Accurate LULC mapping is crucial for monitoring crop health, managing resources, and planning sustainable practices. “Understanding land use changes is fundamental to agriculture,” Mahendra notes. “It helps us anticipate challenges and adapt strategies to ensure food security and environmental conservation.” By providing precise and reliable data, ViTs can support farmers and agribusinesses in making informed decisions, ultimately enhancing productivity and sustainability.

The study’s findings also open up new avenues for future research. As Mahendra points out, “The success of ViTs in this context suggests that similar models could be applied to other regions and datasets, further advancing our understanding of global land use dynamics.” This could lead to the development of more sophisticated tools for monitoring and managing land resources, benefiting not just agriculture but also forestry, urban planning, and environmental conservation.

In the rapidly evolving field of agritech, the integration of advanced technologies like Vision Transformers represents a significant leap forward. As we continue to grapple with the challenges of climate change, urbanization, and resource management, such innovations offer hope for a more sustainable and resilient future. The research published in *Applied Computing and Geosciences* is a testament to the power of technology in transforming our approach to land use and land cover analysis, paving the way for smarter, more informed decision-making in the agriculture sector and beyond.