A hybrid deep learning model for classifying tea leaf diseases could help tea producers detect infections earlier and limit the losses they cause. Researchers combined a convolutional neural network (CNN) with traditional image processing features and report higher classification accuracy than the CNN achieves on its own.

The study, led by Wiranata Ade Davy from the Informatics Engineering Study Program at Universitas Muhammadiyah Prof. Dr. HAMKA, introduces a model that integrates MobileNetV2, a popular CNN architecture, with features derived from the CIELAB color space and Gray Level Co-occurrence Matrix (GLCM) texture descriptors. Fusing the learned visual features with these handcrafted statistical ones yielded 86% accuracy in classifying tea leaf diseases, six percentage points above the baseline MobileNetV2 model, which implies a baseline of about 80%.

The economic implications of this research are substantial. Tea leaf diseases can severely impact the quality and yield of tea plants, leading to considerable financial losses for farmers and the agricultural sector as a whole. By enabling early and accurate disease detection, this hybrid model can help mitigate these losses, ensuring better crop management and improved productivity.

“Our model’s enhanced accuracy can translate to timely interventions, reducing crop losses and improving the overall quality of tea production,” Davy explained. “This is particularly crucial for small-scale farmers who may not have access to advanced diagnostic tools.”

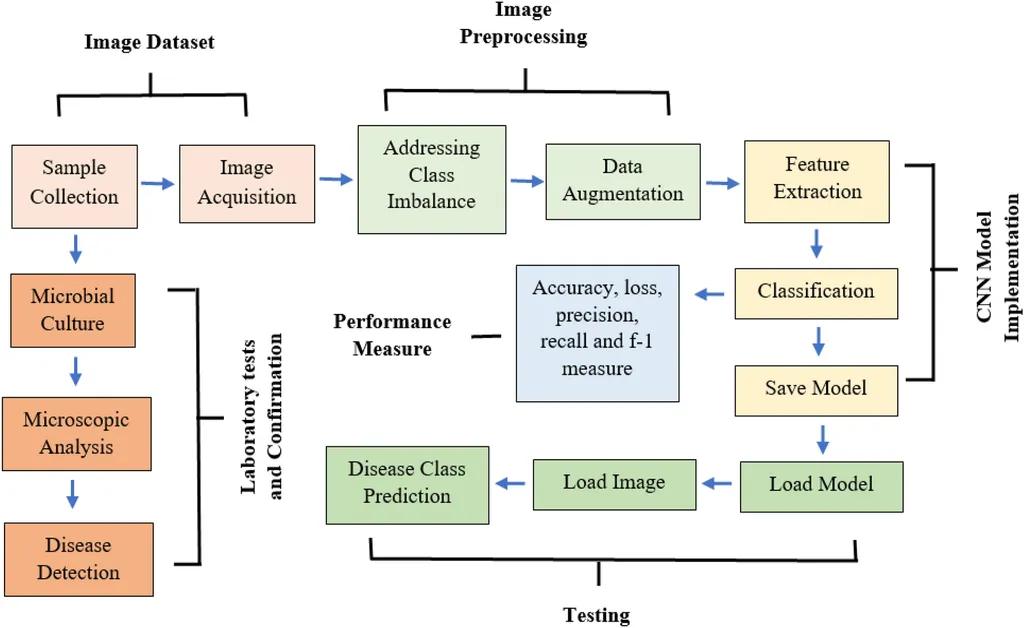

The study used the Tea Sickness Dataset, which comprises eight classes of diseased and healthy leaves with approximately one hundred images per class, or roughly 800 images in total. The images were preprocessed through color conversion, normalization, and moderate augmentation to increase the dataset’s diversity. GLCM texture features were extracted from the luminance channel at four orientations, yielding twenty statistical features (consistent with five descriptors per orientation) that were fused with the CNN features to improve classification performance.
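To make the texture step concrete, here is a minimal NumPy sketch of how twenty GLCM features could be computed from a luminance channel at four orientations and then joined with a CNN embedding. Several details are assumptions and not taken from the study: the five descriptors (contrast, dissimilarity, homogeneity, energy, correlation), the pixel distance of 1, the quantization to 8 gray levels, the 0 to 100 luminance range, and fusion by simple concatenation. All function names are hypothetical.

```python
import numpy as np

# (row, col) offsets for 0, 45, 90 and 135 degrees at distance 1 (assumed distance).
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def quantize(lum, levels=8, max_value=100.0):
    """Map luminance values in [0, max_value] to integer gray levels 0..levels-1."""
    q = np.floor(np.asarray(lum, dtype=np.float64) / max_value * levels)
    return np.clip(q, 0, levels - 1).astype(np.int64)


def glcm(gray, offset, levels=8):
    """Symmetric, normalized co-occurrence matrix for one pixel offset."""
    dr, dc = offset
    h, w = gray.shape
    # Keep only positions whose neighbor at (dr, dc) lies inside the image.
    r0, r1 = max(0, -dr), min(h, h - dr)
    c0, c1 = max(0, -dc), min(w, w - dc)
    a = gray[r0:r1, c0:c1]
    b = gray[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (a.ravel(), b.ravel()), 1.0)  # count co-occurring level pairs
    m = m + m.T                                # make the matrix symmetric
    return m / m.sum()


def glcm_props(p):
    """Five texture descriptors of a normalized GLCM (assumed descriptor set)."""
    i, j = np.indices(p.shape)
    diff = i - j
    contrast = np.sum(p * diff ** 2)
    dissimilarity = np.sum(p * np.abs(diff))
    homogeneity = np.sum(p / (1.0 + diff ** 2))
    energy = np.sqrt(np.sum(p ** 2))
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum(p * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(p * (j - mu_j) ** 2))
    if sd_i * sd_j == 0:
        correlation = 1.0  # convention for a perfectly uniform texture
    else:
        correlation = np.sum(p * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j)
    return [contrast, dissimilarity, homogeneity, energy, correlation]


def glcm_feature_vector(lum, levels=8):
    """4 orientations x 5 descriptors = 20 texture features."""
    gray = quantize(lum, levels)
    feats = []
    for off in OFFSETS:
        feats.extend(glcm_props(glcm(gray, off, levels)))
    return np.array(feats, dtype=np.float64)


def fuse(cnn_embedding, handcrafted):
    """Assumed fusion strategy: concatenate both feature vectors."""
    return np.concatenate([np.ravel(cnn_embedding), np.ravel(handcrafted)])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    luminance = rng.uniform(0.0, 100.0, size=(64, 64))  # stand-in for a leaf image
    texture = glcm_feature_vector(luminance)
    cnn_embedding = np.zeros(1280)  # placeholder for a pooled MobileNetV2 output
    print(texture.shape, fuse(cnn_embedding, texture).shape)
```

In practice, a library routine such as scikit-image's `graycomatrix` and `graycoprops` would likely replace the hand-written matrix code, and the handcrafted features would usually be standardized before fusion so that they are on a scale comparable to the CNN features.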

While the results are promising, the researchers acknowledge limitations such as the relatively small dataset and potential class imbalance, which may limit how well the model generalizes to real-world conditions. To address these issues, future work will focus on larger and more balanced datasets, alternative fusion strategies, attention-based architectures, and explainable AI techniques.

“This research opens up new avenues for integrating traditional image processing techniques with deep learning models,” Davy noted. “It’s a step towards more robust and reliable disease detection systems in precision agriculture.”

The study, published in BIO Web of Conferences, underscores the potential of hybrid models that pair handcrafted image features with deep learning in agricultural practice. As precision agriculture develops, such approaches could support more efficient farming and benefit both producers and consumers.