Efficient and accurate fruit detection is a core requirement of precision agriculture, and a new study moves it a step forward. Researchers have benchmarked and optimized YOLO (You Only Look Once) object-detection architectures for kiwi detection, work that could change how farmers monitor and manage their crops. The study, published in *Scientific Reports*, is particularly relevant to crops like kiwifruit, whose fruit grow densely packed and are often occluded.

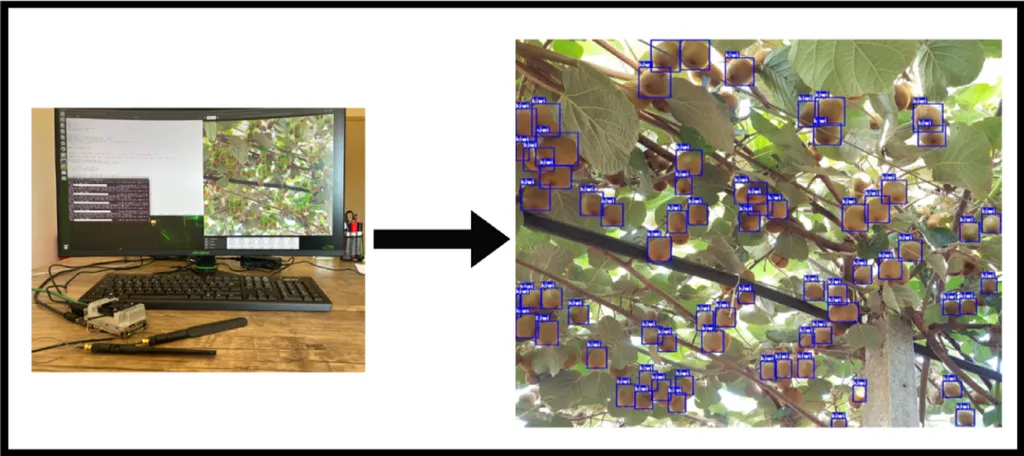

The research, led by Berat Karacaoglu of the Department of Electrical and Electronics Engineering at Yozgat Bozok University, evaluated YOLO architectures from YOLOv8 to YOLOv11, both under high-performance training conditions and when deployed on an NVIDIA Jetson TX2 embedded board. For each YOLO version, the team trained five model sizes (n, s, m, l, x) on a field-collected dataset containing 2,925 training and 1,936 test annotations. The goal was to identify the most efficient model for edge deployment, where computational resources are limited.

One of the key findings was that a structured hyperparameter optimization procedure, applied to all "s" models, produced substantial performance gains. Because the procedure required no architectural modifications, it is a practical and scalable option for real-world applications. Among all evaluated models, the optimized YOLOv11s performed best, reaching a mean Average Precision (mAP@0.5) of 0.956, with a precision of 0.868 and a recall of 0.918. It also kept its embedded inference time to 3.33 seconds per image on the Jetson TX2.
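For readers unfamiliar with the metric, mAP@0.5 counts a predicted box as a true positive when its intersection over union (IoU) with a not-yet-matched ground-truth box is at least 0.5, and then averages precision over the full range of recall. The Python sketch below illustrates that calculation for a single class such as kiwifruit. It is a generic, VOC-style all-point interpolation written for illustration, assuming axis-aligned `(x1, y1, x2, y2)` boxes; it is not the study's evaluation code, and the function names `iou` and `average_precision` are hypothetical. Toolkits differ in interpolation details (COCO-style evaluation samples 101 recall points, for example), so results from this sketch need not match a given framework exactly.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: List[Tuple[int, float, Box]],  # (image_id, confidence, box)
    ground_truth: Dict[int, List[Box]],        # image_id -> annotated boxes
    iou_threshold: float = 0.5,                # 0.5 gives the "@0.5" in mAP@0.5
) -> float:
    """Single-class AP at a fixed IoU threshold (hypothetical helper, all-point interpolation)."""
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp_flags: List[int] = []
    # Greedily match detections to ground truth, most confident first.
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_threshold and not matched[img][best_j]:
            matched[img][best_j] = True
            tp_flags.append(1)  # true positive
        else:
            tp_flags.append(0)  # false positive: weak overlap or duplicate hit

    # Precision = TP / (TP + FP); recall = TP / (all ground-truth boxes).
    precisions: List[float] = []
    recalls: List[float] = []
    tp = fp = 0
    for flag in tp_flags:
        tp += flag
        fp += 1 - flag
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt)

    # Precision envelope: each point takes the best precision at equal or higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Area under the precision-recall curve, summed over recall increments.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

With a single class, as in kiwi detection, mAP@0.5 is just this AP; with several classes it is the mean of the per-class values. The reported precision and recall are single operating points on the same curve, typically taken at one confidence threshold.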

"Our results demonstrate that lightweight YOLO architectures, when supported by targeted hyperparameter tuning, can be effectively adapted for resource-constrained agricultural systems," Karacaoglu explained. This matters because larger models, although they achieve slightly higher raw accuracy, often have latencies that make them unsuitable for edge deployment.

The research also has clear commercial relevance. Precision agriculture relies heavily on real-time data to optimize yield and reduce waste, and accurate, efficient fruit detection can help farmers make informed decisions about harvesting, pest control, and resource allocation. For kiwi farmers, this could mean monitoring crop health more effectively, predicting yields more accurately, and ultimately increasing profitability.

Beyond kiwi detection, the proposed evaluation and optimization pipeline offers a transferable methodology for other fruit-detection tasks. This could pave the way for similar advancements in the detection of apples, oranges, and other crops, further broadening the impact of precision agriculture.

As the agricultural sector continues to adopt new technology, research like this highlights the importance of solutions that are both accurate and efficient. The optimized YOLOv11s model is a step in this direction, balancing detection accuracy against the limited computing resources of embedded hardware.

In the words of Karacaoglu, “This study provides a robust framework for future developments in embedded vision solutions for precision agriculture.” As the field continues to evolve, such innovations will be crucial in meeting the growing demands of sustainable and efficient farming practices.