A study published in *Artificial Intelligence in Agriculture* proposes a new way for farmers and breeders to monitor maize development. Led by Jibo Yue of the College of Information and Management Science at Henan Agricultural University, the research introduces a multi-view sensing strategy that combines unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) to capture detailed data on maize phenological stages.

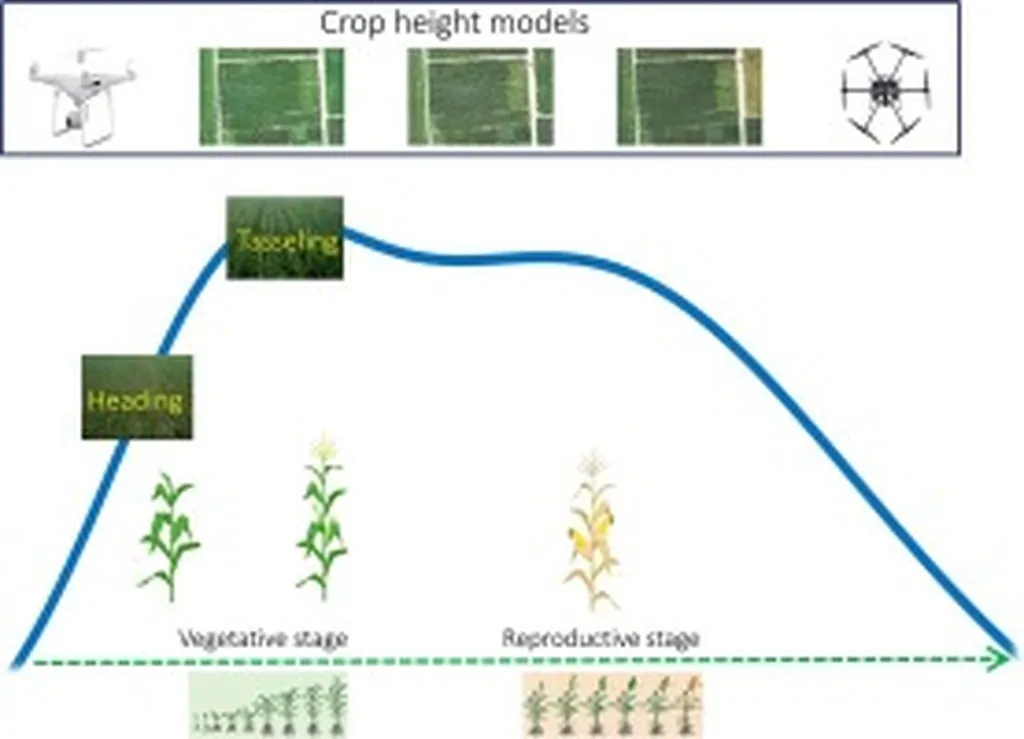

Maize phenology, the timing of key events such as germination, leaf emergence, flowering, and senescence, is a vital indicator of crop health and development. Tracking these stages accurately is essential for optimizing crop breeding and management. Yue's study addresses this need by integrating data from multiple perspectives, including top-down and internal-horizontal views of the crop, to build a dynamic, high-throughput phenotyping system.

The research uses a multi-modal deep learning framework called MSRNet (maize-phenological stages recognition network), which fuses physiological features captured by the UAV and UGV sensors: canopy height, vegetation indices, top-of-canopy (TOC) maize leaf images, and inside-of-canopy (IOC) maize cob images. Drawing on these complementary data streams, MSRNet reached 84.5% overall accuracy across 12 phenological stages, from V2 (two visible leaf collars) to R6 (physiological maturity), outperforming both conventional machine learning models and single-modality deep learning models.
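The article does not describe how MSRNet combines its inputs internally, so the following is only a minimal sketch of one common approach, feature-level fusion, under stated assumptions: each of the four inputs is assumed to arrive as an already-extracted numeric feature vector, the modality names are hypothetical labels, and a simple linear classifier stands in for the real network. It also shows how an "overall accuracy" figure such as 84.5% is computed: the share of samples whose predicted stage matches the observed one.

```python
from __future__ import annotations

import math
from typing import Sequence

# Hypothetical modality names mirroring the four inputs listed in the article.
# MSRNet's actual encoders are not described, so each modality is assumed to
# arrive as a pre-extracted numeric feature vector.
MODALITIES = ("canopy_height", "vegetation_indices", "toc_leaf_image", "ioc_cob_image")


def fuse(features: dict[str, Sequence[float]]) -> list[float]:
    """Feature-level fusion: concatenate per-modality vectors in a fixed order."""
    fused: list[float] = []
    for name in MODALITIES:
        fused.extend(features[name])
    return fused


def softmax(logits: Sequence[float]) -> list[float]:
    """Numerically stable softmax over per-stage scores."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(
    fused: Sequence[float],
    weights: Sequence[Sequence[float]],
    biases: Sequence[float],
) -> tuple[int, list[float]]:
    """Linear head with one weight row per stage; returns (stage index, probabilities)."""
    logits = [sum(w * x for w, x in zip(row, fused)) + b for row, b in zip(weights, biases)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


def overall_accuracy(predicted: Sequence[int], actual: Sequence[int]) -> float:
    """Share of samples whose predicted stage equals the true stage."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)
```

With 12 weight rows, one per stage from V2 to R6, `classify` returns a probability for every stage and picks the most likely one. Concatenation is the simplest fusion choice; learned attention or gating across modalities is a common alternative, and nothing here should be read as MSRNet's actual design.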

One of the study's more intriguing findings is that the model shifts its attention between plant features as the maize grows. Grad-CAM (gradient-weighted class activation mapping) visualizations, which highlight the image regions that most influence a network's prediction, showed that MSRNet automatically prioritizes top-of-canopy leaves during vegetative growth and shifts to inside-of-canopy reproductive organs during grain filling. According to the study, this adaptive weighting of features improves accuracy and also makes the model's decisions easier to relate to the crop's actual development.
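The core Grad-CAM computation is short: average the gradients of a class score over each feature-map channel to get a per-channel weight, take the weighted sum of the channels, and keep only the positive values. The sketch below implements that standard recipe in plain Python on small nested lists. It assumes the activations and gradients have already been extracted from a trained network (in practice a deep learning framework's hooks and automatic differentiation supply them); it is not the study's own visualization code.

```python
from typing import List

# An H x W grid of values; a convolutional layer's output is a list of K grids.
Grid = List[List[float]]


def grad_cam(activations: List[Grid], gradients: List[Grid]) -> Grid:
    """Grad-CAM heatmap for one target class.

    activations[k] is channel k's feature map A^k, and gradients[k] holds the
    gradient of the class score with respect to each cell of A^k.
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight alpha_k: global average of that channel's gradients.
    alphas = [sum(sum(row) for row in grad) / (height * width) for grad in gradients]
    # Weighted sum of the feature maps across channels.
    cam = [[0.0] * width for _ in range(height)]
    for alpha, fmap in zip(alphas, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * fmap[i][j]
    # ReLU keeps only regions that push the class score up.
    return [[max(0.0, v) for v in row] for row in cam]
```

Full implementations usually upsample the resulting map to the input image size and rescale it to the range 0 to 1 before overlaying it on the photo, which is how heatmaps over leaves or cobs like those described in the study are typically produced.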

“The integration of UAV and UGV data allows us to capture a more holistic view of the maize canopy, which is crucial for precision agriculture,” said Yue. “This approach not only improves the accuracy of phenological stage recognition but also offers a scalable solution for large-scale crop monitoring.”

The research could also have substantial commercial value. A more precise and efficient way to track maize development would help breeders and farmers make better-informed decisions about irrigation, fertilization, and pest management. Because the system is high-throughput, it is also suited to large-scale operations, where it could help raise yields and reduce resource waste.

As agriculture continues its digital transformation, the combination of multi-modal sensing and deep learning is likely to play a growing role in crop breeding and management. Yue's research raises the bar for maize phenotyping, and the same approach could be adapted to other crops. With demand rising for sustainable and efficient agricultural practices, technology of this kind could become a standard part of modern farming.

Published in *Artificial Intelligence in Agriculture* and led by Jibo Yue of the College of Information and Management Science at Henan Agricultural University, the study shows what combining advanced sensing and machine learning with agricultural science can achieve, and its findings are likely to inform further work toward more data-driven farming.