In the relentless battle against crop-destroying pests, a team of researchers has developed a deep learning tool that could transform how farmers monitor and protect their maize crops. Published in *Scientific Reports*, the study introduces a framework that combines visible (RGB) and thermal images to detect Fall Army Worm (FAW) infestations with high accuracy. This innovation could significantly reduce crop losses and improve decision-making in precision agriculture, offering a much-needed boost to the agricultural sector.

The Fall Army Worm, a notorious pest known for its rapid spread and devastating impact on maize yields, has long been a thorn in the side of farmers worldwide. Traditional detection methods, which often rely on manual scouting, are labor-intensive and prone to human error. The new framework, developed by lead author Prakash Sandhya from the Department of Mathematics at the Vellore Institute of Technology, aims to address these challenges through multimodal image fusion, combining the information carried by visible and thermal imagery.

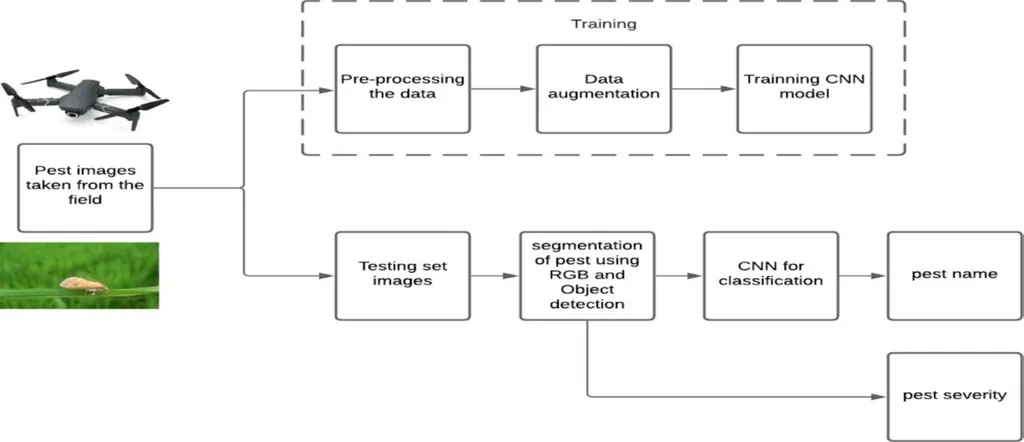

The research introduces a hybrid DNN-ViT model that integrates two complementary pipelines. The first, feature-level fusion, uses Convolutional Neural Networks (CNNs) to extract features from the RGB and thermal images separately, then fuses those features before classification. The second, image-level fusion, feeds a single 6-channel RGB-thermal image directly into a modified Vision Transformer (ViT). By combining the two, the framework aims to improve detection accuracy and provide a robust tool for monitoring crop health.
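The two fusion routes can be illustrated with a short NumPy sketch. This is not the authors' code, because the article does not describe their layers. The following are illustrative assumptions:

* the image size of 224 x 224,
* the 16-pixel ViT patch size, and
* a thermal image stored as a 3-channel (for example pseudo-coloured) rendering, which is what makes the stacked input six channels.

The `toy_backbone` function is a hypothetical placeholder for a CNN. It is reduced to pooling plus a random linear projection so the example runs without a deep learning framework.

```python
import numpy as np


def image_level_fusion(rgb, thermal):
    # Stack two spatially aligned H x W x 3 images into one H x W x 6 array.
    # Assumption: the thermal image is a 3-channel rendering, so 3 + 3 = 6 channels.
    if rgb.shape != thermal.shape or rgb.shape[-1] != 3:
        raise ValueError("expected two aligned H x W x 3 images")
    return np.concatenate([rgb, thermal], axis=-1)


def patchify(image, patch=16):
    # Split an H x W x C image into non-overlapping patch x patch tiles and flatten
    # each one: the step a ViT performs before its linear patch embedding.
    # With 6 input channels, each token has patch * patch * 6 values.
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)  # group by (patch row, patch column)
    return tiles.reshape(-1, patch * patch * c)


def toy_backbone(image, weights):
    # Hypothetical stand-in for a CNN feature extractor:
    # global average pooling over pixels, then a linear map and ReLU.
    pooled = image.mean(axis=(0, 1))
    return np.maximum(pooled @ weights, 0.0)


def feature_level_fusion(rgb, thermal, w_rgb, w_thermal):
    # Extract features from each modality separately, then concatenate them
    # into one vector that a classifier head would consume.
    return np.concatenate([toy_backbone(rgb, w_rgb), toy_backbone(thermal, w_thermal)])


rng = np.random.default_rng(0)
rgb = rng.random((224, 224, 3))
thermal = rng.random((224, 224, 3))

fused_image = image_level_fusion(rgb, thermal)
tokens = patchify(fused_image)
print(fused_image.shape, tokens.shape)  # (224, 224, 6) (196, 1536)

w_rgb = rng.standard_normal((3, 64))
w_thermal = rng.standard_normal((3, 64))
print(feature_level_fusion(rgb, thermal, w_rgb, w_thermal).shape)  # (128,)
```

With image-level fusion, every ViT patch token carries both visible and thermal values for the same pixels from the first layer onward. Feature-level fusion, by contrast, keeps the two modalities apart until their learned features are joined.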

The results are impressive. On the test set, the fused model achieved an accuracy of 0.98, with precision, recall, and F1-score each at 0.98 and an AUC-ROC of 0.98. It clearly outperformed models trained on RGB-only, thermal-only, and unfused data. “The ablation study confirms the effectiveness of multimodal fusion,” Sandhya explains. “The no-fusion model showed significantly lower performance, with an accuracy of 0.60 and an AUC-ROC of 0.67. This highlights the benefits of integrating complementary data sources for robust crop health monitoring.”
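For readers less familiar with these metrics, the sketch below shows how they are computed for a binary infested-versus-healthy classifier. The confusion-matrix counts are made up for illustration and are not the study's data. The article does not report the underlying counts or say how the metrics were averaged, so a simple binary setting is assumed. The `auc_roc` function uses the rank-based definition: the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from binary confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)  # share of flagged images that are truly infested
    recall = tp / (tp + fn)     # share of infested images that were flagged
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two
    return accuracy, precision, recall, f1


def auc_roc(scores, labels):
    """Rank-based AUC: chance a random positive outscores a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical counts for 100 test images (not taken from the study).
print([round(m, 2) for m in classification_metrics(tp=49, fp=1, fn=1, tn=49)])
# [0.98, 0.98, 0.98, 0.98]
print(auc_roc([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0]))  # 0.75
```

These hypothetical counts include one false positive and one false negative out of 100 images, so all four threshold-based metrics equal 0.98. AUC-ROC instead measures how well the model's raw scores rank infested images above healthy ones across every possible decision threshold.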

The commercial implications of this research could be substantial. Automated detection of FAW infestations would let farmers take timely, targeted action to limit crop damage, improving yields and reducing economic losses. The technology could also be integrated into existing precision agriculture systems, offering a scalable solution for large farming operations.

Looking ahead, the researchers plan to explore enhanced fusion strategies, environmental robustness, and field-level deployment to validate the model’s practical applicability. “Future research will focus on making the model more resilient to varying environmental conditions and ensuring its seamless integration into real-world farming practices,” Sandhya adds.

This work not only underscores the potential of deep learning in agriculture but also paves the way for future developments in crop monitoring and pest management. As the agricultural sector continues to embrace technological advancements, tools like this could become indispensable in the fight to ensure food security and sustainability.