In the fast-moving field of precision agriculture, a new framework aims to change the way farmers assess pepper maturity. The “YOLO-AVCA-CBAMNet” framework, developed by Bipin Nair B J from the Department of Computer Science at Amrita Vishwa Vidyapeetham, Mysuru Campus, India, and published in ‘MethodsX’, combines computer vision and deep learning to detect green pepper berries and classify their maturity stages, with a reported peak accuracy of 96.19%.

The framework is designed for natural field conditions and addresses a critical challenge in spice production: determining the optimal harvest time. The study uses a self-collected dataset of pepper berry images captured under diverse lighting and against complex backgrounds. This choice supports the ecological validity and practical relevance of the proposed approach.

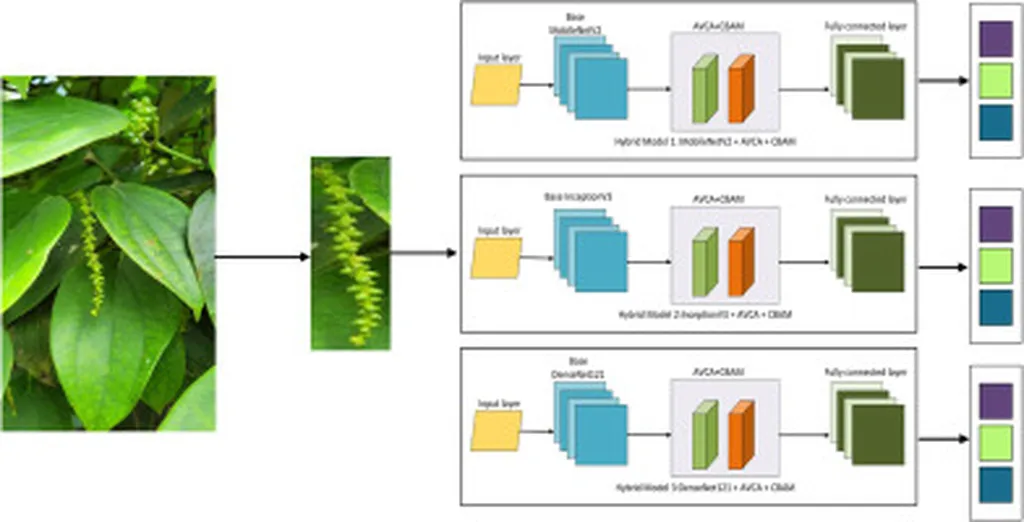

At the heart of the YOLO-AVCA-CBAMNet framework lies a dual-attention design. The Adaptive Visual Cortex Attention Module (AVCAM) strengthens global contextual weighting by adaptively recalibrating salient features, while the Convolutional Block Attention Module (CBAM) sharpens discrimination by applying channel attention and then spatial attention in sequence. Together, the two modules allow the model to separate maturity stages that look visually similar more reliably, an important step for agricultural imaging.
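The article does not describe AVCAM's internals, so only the CBAM half of the design is sketched here, in its commonly published form. Channel attention passes average-pooled and max-pooled channel descriptors through a shared two-layer MLP. Spatial attention then convolves the channel-wise average and max maps with a k×k kernel. Each step produces a sigmoid mask that rescales the features. This is a minimal pure-Python sketch, not the framework's implementation. It assumes a single C×H×W feature map stored as nested lists, random untrained weights, a reduction ratio of 2 and a 7×7 kernel. The function names (`channel_attention`, `spatial_attention`, `cbam`, `random_params`) are hypothetical.

```python
import math
import random


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def mlp(vec, w1, w2):
    """Shared two-layer MLP: C -> C/r (ReLU) -> C."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(x, w1, w2):
    """Scale each channel by sigmoid(MLP(avg-pool) + MLP(max-pool))."""
    avg = [sum(sum(r) for r in ch) / (len(ch) * len(ch[0])) for ch in x]
    mx = [max(max(r) for r in ch) for ch in x]
    scale = [sigmoid(a + m) for a, m in zip(mlp(avg, w1, w2), mlp(mx, w1, w2))]
    return [[[v * s for v in row] for row in ch] for ch, s in zip(x, scale)]


def spatial_attention(x, kernel):
    """Scale each position by sigmoid(conv([channel-avg, channel-max]))."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    avg_map = [[sum(x[n][i][j] for n in range(c)) / c for j in range(w)] for i in range(h)]
    max_map = [[max(x[n][i][j] for n in range(c)) for j in range(w)] for i in range(h)]
    maps = (avg_map, max_map)
    k = len(kernel[0])
    pad = k // 2  # "same" zero padding keeps the H x W size
    mask = []
    for i in range(h):
        mask_row = []
        for j in range(w):
            s = 0.0
            for m in range(2):  # two input maps: average and max
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h and 0 <= jj < w:
                            s += kernel[m][di][dj] * maps[m][ii][jj]
            mask_row.append(sigmoid(s))
        mask.append(mask_row)
    # The same spatial mask is shared by every channel.
    return [[[ch[i][j] * mask[i][j] for j in range(w)] for i in range(h)] for ch in x]


def cbam(x, w1, w2, kernel):
    """CBAM ordering: channel attention first, then spatial attention."""
    return spatial_attention(channel_attention(x, w1, w2), kernel)


def random_params(channels, reduction=2, k=7, seed=0):
    """Hypothetical untrained weights; a real model would learn these."""
    rng = random.Random(seed)
    hidden = max(1, channels // reduction)
    w1 = [[rng.gauss(0, 0.5) for _ in range(channels)] for _ in range(hidden)]
    w2 = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in range(channels)]
    kernel = [[[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(k)] for _ in range(2)]
    return w1, w2, kernel


if __name__ == "__main__":
    rng = random.Random(42)
    C, H, W = 4, 8, 8
    feat = [[[rng.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
    w1, w2, kernel = random_params(C)
    refined = cbam(feat, w1, w2, kernel)
    print(len(refined), len(refined[0]), len(refined[0][0]))  # 4 8 8
```

Because both masks lie between 0 and 1 and are applied multiplicatively, the module can only down-weight uninformative channels and background positions while preserving the feature map's shape. This shape preservation is what lets attention blocks be inserted between backbone stages. In a trained network, `w1`, `w2` and `kernel` would be learned by backpropagation rather than drawn at random. Where the authors place CBAM relative to AVCAM and the backbone is not specified in the article.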

“The integration of these attention mechanisms has allowed us to achieve accuracy gains of 5–9% across all backbone architectures,” explains Nair. “Our DenseNet121-based configuration achieved a peak accuracy of 96.19%, demonstrating the potential of attention-driven models to support interpretable, efficient, and scalable maturity assessment solutions in precision agriculture.”

The research could have substantial commercial impact. Accurate maturity assessment is crucial for maintaining quality standards and choosing the right harvest time, which directly affects the yield and market value of pepper crops. By offering a reliable, scalable assessment tool, the YOLO-AVCA-CBAMNet framework can help farmers make data-driven decisions and, ultimately, improve productivity and profitability.

Moreover, the success of this framework opens doors for future developments in agricultural imaging. The integration of attention mechanisms in computer vision models could pave the way for more sophisticated, context-aware solutions in various agricultural applications, from disease detection to yield prediction.

As the agriculture sector continues to adopt new technology, the YOLO-AVCA-CBAMNet framework illustrates what deep learning and computer vision can offer. With its high reported accuracy on images captured in natural field conditions, it has the potential to shape the future of precision agriculture, driving efficiency and sustainability in spice production and beyond.