A new visual speech recognition model could change how farmers and agricultural workers interact with technology. Researchers have introduced the Optimized Quaternion Charlier Moments Convolutional Neural Network (QCMs-PSO-CNN), a model designed to recognize speech from lip movements alone. The work, published in the Ain Shams Engineering Journal, could improve communication in noisy environments, a common challenge in agricultural settings.

The study, led by Omar El Ogri from the Laboratory of Sciences, Engineering and Management (LSEM) at Sidi Mohamed Ben Abdellah University and the Laboratory of Sustainable Agriculture Management (LSAM) at Chouaib Doukkali University, presents a method that optimizes visual speech recognition using an enhanced particle swarm optimization (PSO). The approach reduces the high dimensionality of the video frames and significantly cuts training time, which makes it more practical for real-world applications.
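For readers unfamiliar with PSO, the sketch below shows the basic global-best variant of the algorithm in plain Python. It is only an illustration: the article does not describe how the paper's PSO is enhanced or which quantities it tunes, so the function `pso_minimize`, its default coefficients, and the two-parameter `toy_objective` are all assumptions made for this example, not the authors' implementation.

```python
import random

def pso_minimize(objective, bounds, n_particles=20, n_iters=100,
                 inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` inside a box using basic global-best PSO."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Random starting positions inside the box, zero starting velocities.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                  # best position seen by each particle
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # best position seen by the swarm

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity = momentum + pull to own best + pull to swarm best.
                vel[i][d] = (inertia * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                lo, hi = bounds[d]
                # Move the particle and clamp it to the search box.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val

def toy_objective(params):
    # Hypothetical stand-in for a validation loss; its minimum is at (3, 5).
    a1, a2 = params
    return (a1 - 3.0) ** 2 + (a2 - 5.0) ** 2

best, best_val = pso_minimize(toy_objective, bounds=[(0.1, 10.0), (0.1, 10.0)])
print(best, best_val)
```

In a real pipeline the objective would be something expensive, such as the validation error of a network trained with a given parameter setting, which is why a gradient-free search like PSO can be attractive.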

“Our method effectively strengthens the optimization capability, allowing for better recognition of digits, letters, or words,” El Ogri explained. This advancement is particularly relevant for the agriculture sector, where clear communication is crucial for coordinating tasks, especially in environments with high ambient noise, such as tractor cabs or livestock barns.

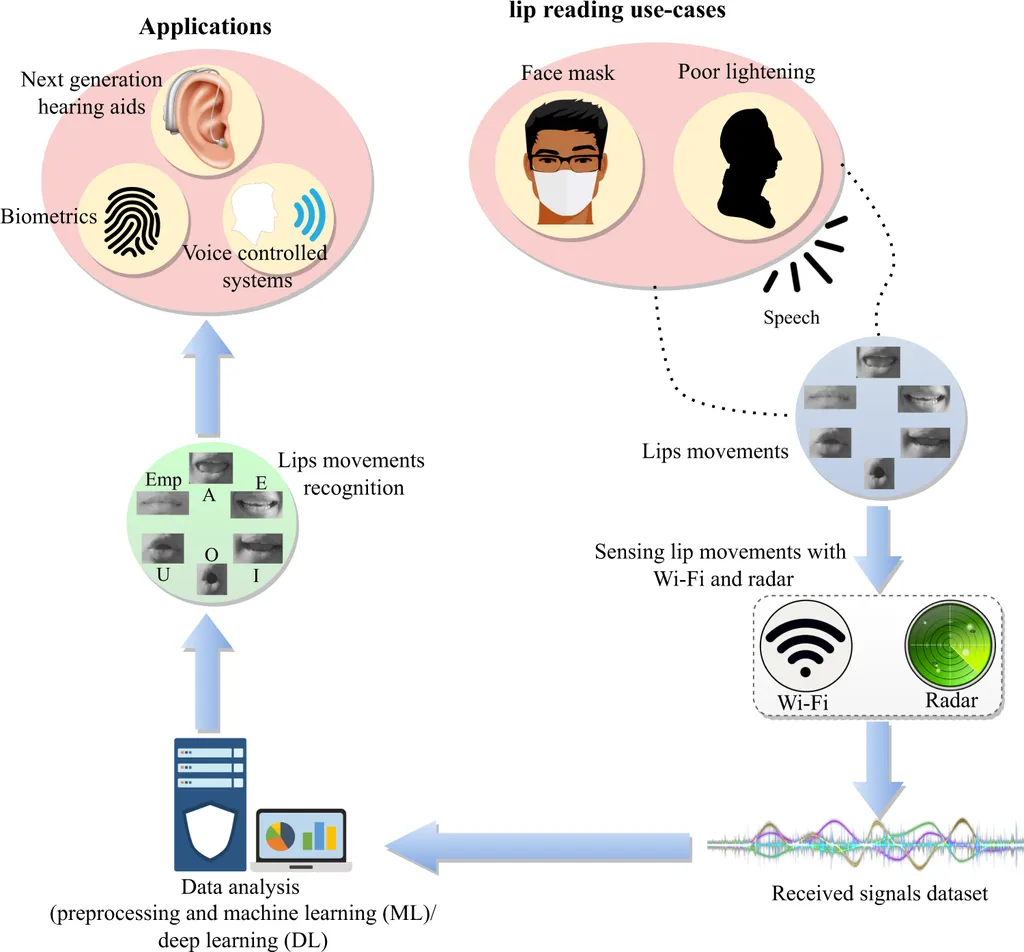

The proposed QCMs-PSO-CNN model uses optimized Quaternion Charlier Moments (QCMs) as a filter in the first layer of a custom-built shallow Convolutional Neural Network (CNN). According to the authors, this integration improves the model’s recognition ability. The study evaluated the method on three standard video datasets, GRID, LRW, and GLips, and reports excellent recognition performance compared with other recently investigated approaches.
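To give a rough sense of what a Charlier-moment filter looks like, the sketch below builds weighted, normalized Charlier polynomials with their standard three-term recurrence and combines two of them into a separable 2-D kernel. It is a simplified illustration under stated assumptions: it handles a single grayscale patch rather than the quaternion (color) formulation, the helper names `charlier_basis`, `moment_kernel`, and `moment` are hypothetical, and treating the polynomial parameter `a` as the value to tune is an assumption, since the article does not say what the PSO optimizes.

```python
import math

def charlier_basis(order, size, a):
    """Return basis[n][x]: normalized Charlier functions, n = 0..order, x = 0..size-1."""
    columns = []
    for x in range(size):
        # Three-term recurrence: a*C_{n+1} = (n + a - x)*C_n - n*C_{n-1},
        # started from C_0 = 1 and C_1 = 1 - x/a.
        c = [1.0, 1.0 - x / a]
        for n in range(1, order):
            c.append(((n + a - x) * c[n] - n * c[n - 1]) / a)
        # Poisson weight under which Charlier polynomials are orthogonal.
        weight = math.exp(-a) * a ** x / math.factorial(x)
        columns.append([c[n] * math.sqrt(weight * a ** n / math.factorial(n))
                        for n in range(order + 1)])
    # Transpose so the first index is the polynomial order.
    return [list(row) for row in zip(*columns)]

def moment_kernel(basis, n, m):
    """Separable 2-D kernel K[x][y] = basis[n][x] * basis[m][y]."""
    return [[bx * by for by in basis[m]] for bx in basis[n]]

def moment(patch, kernel):
    """Charlier moment of a patch: elementwise product with the kernel, summed."""
    return sum(k * p for k_row, p_row in zip(kernel, patch)
               for k, p in zip(k_row, p_row))

# Hypothetical usage on a synthetic 16x16 grayscale patch.
basis = charlier_basis(order=2, size=16, a=2.0)
kernel = moment_kernel(basis, 1, 0)
patch = [[float(x + y) for y in range(16)] for x in range(16)]
print(moment(patch, kernel))
```

Kernels built this way are fixed by the choice of orders and of `a` rather than learned by backpropagation, which is one plausible reason a moment-based first layer can shrink the input and shorten training.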

The implications for the agriculture sector could be substantial. Imagine a future where farmers can communicate with autonomous machinery, drones, or other smart devices using lip-reading technology. This could streamline operations, reduce errors, and improve overall efficiency. “The proposed architecture [is] found to classify the digits, letters, or words effectively,” noted El Ogri, highlighting the model’s potential to transform how we interact with technology in the field.

As the agriculture industry continues to embrace digital transformation, innovations like the QCMs-PSO-CNN model pave the way for more intuitive and efficient communication tools. This research not only advances the field of visual speech recognition but also opens up new possibilities for enhancing productivity and safety in agriculture. The study’s findings, published in the Ain Shams Engineering Journal, underscore the importance of interdisciplinary research in driving technological advancements that can benefit various sectors, including agriculture.

For agritech, this work on visual speech recognition marks a notable step forward. As researchers continue to refine the technology and expand its applications, intelligent systems are likely to become more tightly integrated into agricultural practice.