Researchers in precision agriculture continue to look for better ways to monitor and manage crops. A recent study published in *Frontiers in Plant Science* introduces an approach that could significantly improve rice detection in unmanned aerial vehicle (UAV) remote sensing images. The research, led by Jianxiang Zhang from the College of Agronomy and Horticulture at Jiangsu Vocational College of Agriculture and Forestry, presents the Information Vortex-based progressive fusion YOLO (IV-YOLO) model, which is designed for detection at the individual plant level.

Precision agriculture relies heavily on accurate and timely data to optimize crop management practices. However, conventional detection models often struggle with two problems in UAV images: the features of neighboring rice plants adhere to one another, and background clutter interferes with the plants themselves. “The adhesion of rice plant features and background clutter has been a persistent issue, making it difficult to achieve precise detection at the individual plant level,” explains Zhang. To address this, the IV-YOLO model employs a Multi-scale Spiral Information Vortex (MSIV) module, which uses multi-scale rotational kernel convolution and channel-spatial joint reconstruction to disentangle adhered features and decouple background clutter.
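To give a rough feel for what "multi-scale rotational kernel convolution" could involve, the sketch below filters a small image with an edge kernel at two sizes and four 90-degree rotations, then keeps the strongest response at each pixel. This is not the MSIV module from the study: the edge kernel, the rotation angles, the max over orientations, and every function name here are assumptions chosen for illustration, and the channel-spatial reconstruction step is left out entirely.

```python
def rotate90(kernel):
    """Rotate a square kernel 90 degrees clockwise."""
    return [list(row) for row in zip(*kernel[::-1])]


def conv2d_same(image, kernel):
    """Zero-padded 'same' cross-correlation of a 2D list with an odd-sized square kernel."""
    h, w = len(image), len(image[0])
    k = len(kernel)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(k):
                for dx in range(k):
                    yy, xx = y + dy - r, x + dx - r
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += image[yy][xx] * kernel[dy][dx]
            out[y][x] = acc
    return out


def edge_kernel(size):
    """Hypothetical edge kernel: -1 in the left column, +1 in the right column."""
    return [[-1.0] + [0.0] * (size - 2) + [1.0] for _ in range(size)]


def multi_scale_rotational_response(image, sizes=(3, 5)):
    """Strongest absolute response over all kernel sizes and 90-degree rotations."""
    h, w = len(image), len(image[0])
    best = [[0.0] * w for _ in range(h)]
    for size in sizes:
        kernel = edge_kernel(size)
        for _ in range(4):  # 0, 90, 180, 270 degrees
            response = conv2d_same(image, kernel)
            for y in range(h):
                for x in range(w):
                    # Divide by the kernel size so both scales are comparable.
                    best[y][x] = max(best[y][x], abs(response[y][x]) / size)
            kernel = rotate90(kernel)
    return best
```

Because the kernel is rotated, a vertical boundary and a horizontal boundary produce the same response strength, which is the property that makes rotated filters attractive when plant edges can point in any direction.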

Another key component of the IV-YOLO model is its Gradual Feature Fusion Neck (GFEN). It combines the high-resolution details carried by shallow features, such as tiller edges and panicle textures, with the high-level semantic information carried by deep features. The result is a multi-scale feature representation that is both discriminative and complete. “By progressively fusing these features, we can generate more accurate and reliable detections,” Zhang adds.
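The general idea of fusing features progressively can be sketched as a top-down pass: start from the deepest, smallest feature map, upsample it, blend it with the next shallower map, and repeat. The snippet below is a minimal illustration of that pattern, not the GFEN design itself. The nearest-neighbor upsampling, the fixed blend weight, and the function names are all assumptions, and real detection necks use learned convolutions rather than a plain weighted average.

```python
def upsample2x(fmap):
    """Nearest-neighbor 2x upsampling of a 2D list."""
    out = []
    for row in fmap:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def fuse(shallow, deep, weight=0.5):
    """Blend a shallow map with a deep map that has half its resolution."""
    upsampled = upsample2x(deep)
    return [
        [(1 - weight) * s + weight * d for s, d in zip(s_row, d_row)]
        for s_row, d_row in zip(shallow, upsampled)
    ]


def progressive_fusion(pyramid, weight=0.5):
    """Fuse a pyramid ordered from shallow (largest) to deep (smallest), one level at a time."""
    fused = pyramid[-1]
    for level in reversed(pyramid[:-1]):
        fused = fuse(level, fused, weight)
    return fused
```

For example, with a three-level pyramid of constant maps holding 1.0 (4x4), 3.0 (2x2), and 5.0 (1x1), the deepest two levels blend to 4.0, and blending that with the shallowest level gives a 4x4 map of 2.5. Each output pixel therefore carries a contribution from every level.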

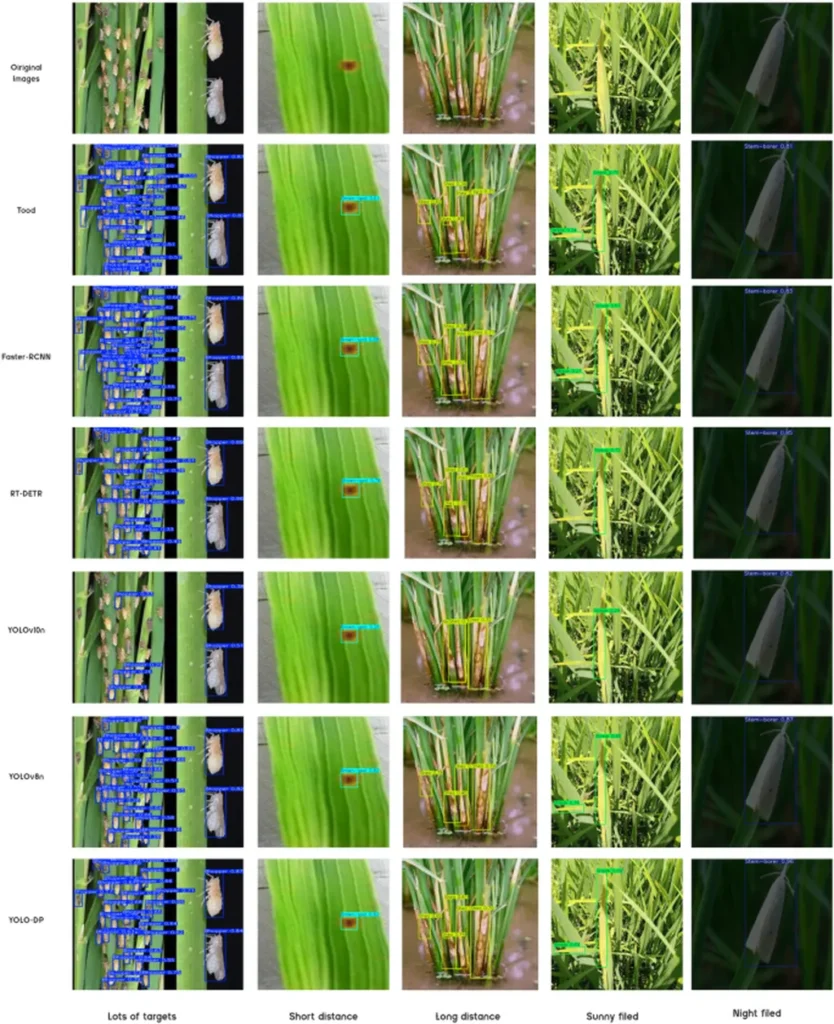

The IV-YOLO model was evaluated in experiments on the public DRPD dataset. It achieved a precision of 0.8581 and outperformed other state-of-the-art models, including YOLOv5–YOLOv11 and FRPNet, across all metrics. Accuracy at this level lets farmers and agronomists monitor rice crops plant by plant rather than field by field.
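For readers unfamiliar with the metric, precision in object detection is the share of predicted boxes that correspond to a real object: true positives divided by true positives plus false positives. The sketch below shows one common way to compute it, matching predictions to ground-truth boxes greedily by intersection over union (IoU). The 0.5 IoU threshold, the greedy matching order, and the function names are assumptions for illustration. The article does not state the evaluation protocol behind the 0.8581 figure.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision(predictions, ground_truth, iou_threshold=0.5):
    """Precision = TP / (TP + FP).

    predictions: list of (score, box) pairs, matched in descending score order.
    Each ground-truth box can be matched at most once (assumed protocol).
    """
    unmatched = list(ground_truth)
    tp = fp = 0
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best = max(unmatched, key=lambda gt: iou(box, gt), default=None)
        if best is not None and iou(box, best) >= iou_threshold:
            unmatched.remove(best)  # this plant is now accounted for
            tp += 1
        else:
            fp += 1  # no remaining plant overlaps enough
    return tp / (tp + fp) if tp + fp else 0.0
```

With two ground-truth plants and four predictions, of which two overlap real plants, one lands on empty background, and one duplicates an already matched plant, the function returns 0.5.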

The commercial implications of this research are substantial. Accurate individual plant-level detection can lead to more targeted and efficient use of resources, such as water, fertilizers, and pesticides. This not only improves crop yields but also reduces environmental impact. “The ability to detect and monitor individual plants can revolutionize how we approach crop management,” says Zhang. “It allows for more precise interventions and optimizations, ultimately leading to higher productivity and sustainability.”

The IV-YOLO model’s results on rice suggest it could be applied to other crops and agricultural settings. The research provides a reliable technical solution for rice monitoring, and it points to a larger role for deep learning techniques of this kind as precision agriculture develops.

For the agricultural sector, plant-level detection models such as IV-YOLO raise the bar for precision and efficiency in crop monitoring, in support of more sustainable and productive farming.