In the arid expanses of desert farmlands, where sparse vegetation clings to life amid varying soil colors and harsh shadows, precision agriculture faces unique challenges. A recent study published in the *Journal of Imaging* offers a promising solution to one of these challenges: accurate vegetation segmentation in drone imagery. Led by Thani Jintasuttisak from the Department of Computer Innovation and Digital Industry at Nakhon Si Thammarat Rajabhat University, the research presents a machine learning approach that combines color features from the HSV (hue, saturation, value) color space with Gray-Level Co-occurrence Matrix (GLCM) texture features and a Support Vector Machine (SVM) classifier.
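To make the feature side of this pipeline concrete, here is a minimal NumPy sketch of how HSV color statistics and GLCM texture measures might be combined into one feature vector per image patch. The article does not specify the patch size, GLCM offsets, gray-level quantization, or which GLCM properties the study used, so the choices below (a single horizontal offset, 8 gray levels on the V channel, and contrast, homogeneity, and energy) are assumptions, as are the hypothetical helper names `rgb_to_hsv`, `glcm`, `glcm_props`, and `patch_features`.

```python
import numpy as np

def rgb_to_hsv(rgb):
    """Convert an (H, W, 3) float RGB array in [0, 1] to HSV, each channel in [0, 1]."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    maxc = rgb.max(axis=-1)
    delta = maxc - rgb.min(axis=-1)
    safe_delta = np.where(delta > 0, delta, 1.0)  # avoid division by zero on grey pixels
    # Later np.where calls take priority, so red is checked last (same order as colorsys).
    h = np.where(maxc == b, (r - g) / safe_delta + 4.0, 0.0)
    h = np.where(maxc == g, (b - r) / safe_delta + 2.0, h)
    h = np.where(maxc == r, ((g - b) / safe_delta) % 6.0, h)
    h = np.where(delta > 0, h / 6.0, 0.0)
    s = np.where(maxc > 0, delta / np.where(maxc > 0, maxc, 1.0), 0.0)
    return np.stack([h, s, maxc], axis=-1)

def glcm(gray_levels, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for one non-negative pixel offset (dx, dy)."""
    h, w = gray_levels.shape
    a = gray_levels[:h - dy, :w - dx]  # reference pixels
    b = gray_levels[dy:, dx:]          # neighbours at the given offset
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (a.ravel(), b.ravel()), 1)
    m = m + m.T                        # count each pair in both directions
    return m / m.sum()

def glcm_props(p):
    """Contrast, homogeneity and energy of a normalized GLCM."""
    i, j = np.indices(p.shape)
    contrast = np.sum(p * (i - j) ** 2)
    homogeneity = np.sum(p / (1.0 + (i - j) ** 2))
    energy = np.sqrt(np.sum(p ** 2))
    return contrast, homogeneity, energy

def patch_features(rgb_patch, levels=8):
    """Hypothetical per-patch feature vector: HSV means and stds plus 3 GLCM texture values."""
    hsv = rgb_to_hsv(rgb_patch)
    flat = hsv.reshape(-1, 3)
    # Simplification: hue is treated as linear, ignoring its wrap-around at red.
    color = np.concatenate([flat.mean(axis=0), flat.std(axis=0)])
    # Quantize the V (brightness) channel into `levels` gray levels for the GLCM.
    q = np.minimum((hsv[..., 2] * levels).astype(int), levels - 1)
    texture = np.array(glcm_props(glcm(q, levels)))
    return np.concatenate([color, texture])
```

Vectors like these, computed for labeled vegetation and background patches, could then be used to train an SVM, for example scikit-learn's `sklearn.svm.SVC`; the kernel and hyperparameters used in the study are not given in the article.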

The study addresses a critical need in precision agriculture, where the ability to accurately separate vegetation from background elements is paramount. Traditional approaches, such as the ExG (Excess Green) and CIVE (Color Index of Vegetation Extraction) vegetation indices, often fall short under the complex conditions of desert environments. “The varying soil colors and strong shadows in desert farmlands make it difficult to distinguish vegetation accurately,” explains Jintasuttisak. “Our method aims to overcome these challenges by leveraging the strengths of HSV color space and GLCM texture features.”
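For comparison, the index-based baselines are simple per-pixel formulas. The sketch below assumes the commonly used definitions (ExG = 2g − r − b on sum-normalized chromatic coordinates, and CIVE = 0.441R − 0.811G + 0.385B + 18.78745 on 8-bit values, where lower CIVE means greener) and pairs them with Otsu thresholding. The article does not say which variants or thresholds the study used, and `exg`, `cive`, `otsu_threshold`, and `index_mask` are hypothetical names.

```python
import numpy as np

def exg(rgb):
    """Excess Green, 2g - r - b, on chromatic (sum-normalized) coordinates."""
    total = rgb.sum(axis=-1, keepdims=True)
    chrom = rgb / np.where(total > 0, total, 1.0)  # black pixels stay at zero
    r, g, b = chrom[..., 0], chrom[..., 1], chrom[..., 2]
    return 2.0 * g - r - b

def cive(rgb):
    """Color Index of Vegetation Extraction; lower values indicate vegetation.
    The coefficients assume 8-bit RGB values in the range 0-255."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return 0.441 * r - 0.811 * g + 0.385 * b + 18.78745

def otsu_threshold(values, bins=256):
    """Return the histogram bin edge that maximizes between-class variance."""
    hist, edges = np.histogram(values.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist)        # pixel count in bins 0..k
    w1 = w0[-1] - w0            # pixel count in bins k+1..end
    s0 = np.cumsum(hist * centers)
    mu0 = s0 / np.maximum(w0, 1)
    mu1 = (s0[-1] - s0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    k = int(np.argmax(between))
    return edges[k + 1]         # values >= this edge fall in the upper class

def index_mask(rgb, method="exg"):
    """Boolean vegetation mask from a color index plus an Otsu threshold."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Negate CIVE so that, for both indices, higher scores mean "greener".
    score = exg(rgb) if method == "exg" else -cive(rgb)
    return score >= otsu_threshold(score)
```

Because each index looks only at a single pixel's color, a shadowed plant or a greenish patch of soil can land on the wrong side of the threshold, which is the weakness the combined color and texture approach targets.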

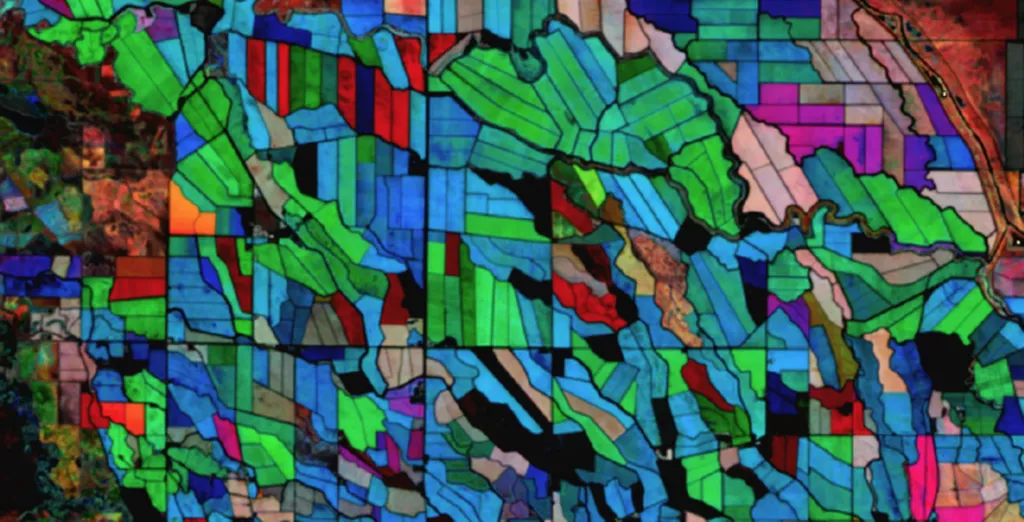

The proposed approach preprocesses each image with Gaussian filtering, illumination normalization, and bilateral filtering, then refines the classifier's output with morphological post-processing to improve segmentation quality. The method was evaluated on 120 high-resolution drone images from UAE desert farmlands, where it reached an accuracy of 0.91, an F1-score of 0.88, and an Intersection over Union (IoU) of 0.82, outperforming the baseline methods it was compared against and handling the particular difficulties of desert conditions better.
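The three reported scores all derive from pixel-level counts of true and false positives and negatives, with vegetation as the positive class. The sketch below computes them for one predicted mask against one ground-truth mask; the article does not say whether the study averaged scores per image or pooled counts over all 120 images, and `segmentation_metrics` is a hypothetical helper.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """Accuracy, F1 and IoU for binary masks (True = vegetation)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)    # vegetation correctly found
    tn = np.sum(~pred & ~truth)  # background correctly rejected
    fp = np.sum(pred & ~truth)   # background labeled as vegetation
    fn = np.sum(~pred & truth)   # vegetation missed
    accuracy = (tp + tn) / pred.size
    # If neither mask contains vegetation, treat the overlap scores as perfect.
    f1 = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 1.0
    return {"accuracy": float(accuracy), "f1": float(f1), "iou": float(iou)}
```

For example, `segmentation_metrics([[1, 1, 0, 0]], [[1, 0, 0, 0]])` has one true positive, one false positive and two true negatives, giving an accuracy of 0.75, an F1-score of 2/3 and an IoU of 0.5. IoU penalizes errors more heavily than accuracy, which is why it is usually the lowest of the three.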

The commercial implications for the agriculture sector are substantial. Accurate vegetation segmentation enables farmers to monitor crop health, optimize irrigation, and apply pesticides or fertilizers more precisely. This can improve yields while promoting sustainable farming practices by reducing waste and environmental impact. “Precision agriculture is about making data-driven decisions,” says Jintasuttisak. “Our method provides the accurate and reliable data needed to make these decisions effectively.”

The method takes 25 seconds to process each image and 28 minutes to train. It favors accuracy over processing speed, but these timings remain practical for real-world agricultural applications and fit the demands of modern farming.

Looking ahead, this research could inspire further machine learning and computer vision techniques tailored to agricultural needs. As drone technology continues to advance, segmentation methods like this one could become standard practice and change how farmers manage their crops.

In the quest for sustainable and efficient agriculture, every technological advance brings closer a future where precision and sustainability go hand in hand. By tackling the specific challenges of desert farmlands, this study shows how targeted research can drive progress in the agriculture sector.